Face integration guide

The purpose of the Integration Guide is to give developers everything they need to set up and work with a minimally viable application using the Capture SDK.

Introduction

The Capture SDK is intended for developers who want to use IDEMIA technologies within their mobile apps.

The main features are:

Biometric captures

Biometric coding

Fingerprint capture and matching

Biometric authentication and identification

Identity documents reading

Adding the SDK to your project

Gradle

Configure repository:

```groovy
buildscript {
    repositories {
        maven {
            url "$repositoryUrlMI"
            credentials {
                username "$artifactoryUserMI"
                password "$artifactoryPasswordMI"
            }
        }
        ...
    }
    ...
}
```

repositoryUrlMI: Mobile Identity artifactory repository url

artifactoryUserMI: Mobile Identity artifactory username

artifactoryPasswordMI: Mobile Identity artifactory password

These properties can be obtained through the Experience Portal (My Identity Proofing -> Access) and should be stored in the local gradle.properties file, so that the credentials are not included in source code. Configuration of properties:

```properties
artifactoryUserMI=artifactory_user
artifactoryPasswordMI=artifactory_credentials
repositoryUrlMI=https://mi-artifactory.otlabs.fr/artifactory/smartsdk-android-local
```

More about Gradle properties can be found here.
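A build that cannot resolve the SDK often turns out to be missing one of the three properties above. The following is a minimal sketch of a check for that, assuming the properties text has already been read from gradle.properties. The helper `missingRepositoryKeys` is hypothetical and is not part of the SDK or of Gradle; only the three property names come from this guide.

```kotlin
import java.io.StringReader
import java.util.Properties

// Property names are taken from this guide; the helper itself is a hypothetical illustration.
val requiredRepositoryKeys = listOf("repositoryUrlMI", "artifactoryUserMI", "artifactoryPasswordMI")

/** Parses gradle.properties-style text and returns the required keys that are missing or blank. */
fun missingRepositoryKeys(propertiesText: String): List<String> {
    val props = Properties()
    props.load(StringReader(propertiesText))
    return requiredRepositoryKeys.filter { props.getProperty(it).isNullOrBlank() }
}
```

An empty result means all three values are present; it does not verify that the credentials are accepted by the repository.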

For biometric features the dependency is:

```groovy
implementation("morpho.mph_bio_sdk.android:SmartBio:version")
```

For document features the dependency is:

```groovy
implementation("morpho.mph_bio_sdk.android:SmartDoc:version")
```

For all features the dependency is:

```groovy
implementation("morpho.mph_bio_sdk.android:SmartSDK:version")
```

version: artifact version

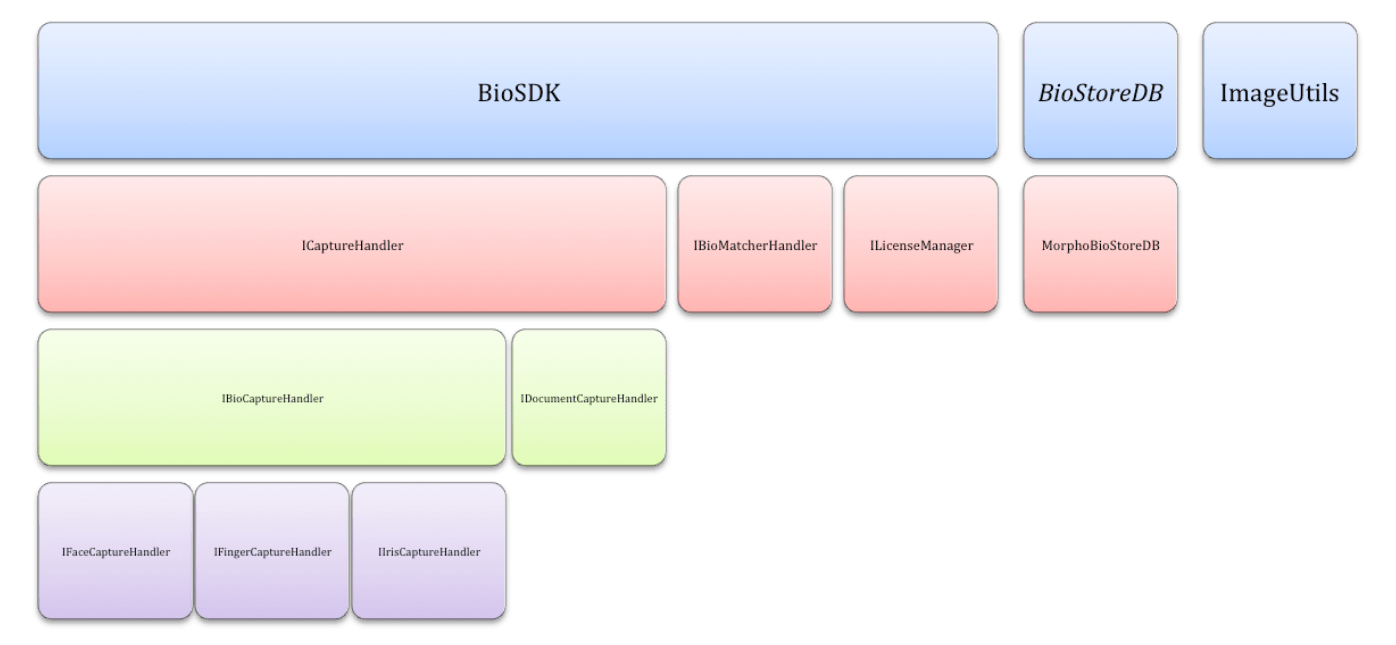

Components

The SDK comprises six distinct components:

BioCaptureHandler: Handles the capture of the biometrics through the camera of the device.

BioMatcherHandler: Handles the biometric coding and matching.

DocumentCaptureHandler (see: DocumentCaptureHandler): Handles the document reading features (like reading MRZ documents).

BioStoreDB: Repository to store biometric templates. (This component is optional, in case you don't want to implement your own database.)

ImageUtils: Handles the image format conversion, in case the integrator must change the image format or import an image.

LicenseManager: Handles the license management. Refer to License Manager for more details.

Access to BioCaptureHandler, BioMatcherHandler and DocumentCaptureHandler is through the Biometric Capture SDK entry points.

Design considerations

User permissions must be handled by the integrator. You must check that the app permissions are granted by the user if the device runs Android API level 23 or higher (as detailed here).

Remember: You must always have a valid license before using any method of this SDK. You can activate it through LicenseManager. Refer to License Manager for more details.

Note: If your app is to run in low memory devices, you must add android:largeHeap="true" to your application.

If you find that your project requires other native libraries, you must add in your gradle.properties file the following filter:

```properties
android.useDeprecatedNdk=true
```

And in your build.gradle add filters for the desired ABI. For now, the SDK supports armeabi-v7a and arm64-v8a:

```groovy
defaultConfig {
    ....
    ndk.abiFilters 'armeabi-v7a','arm64-v8a'
}
```

Prerequisites

Skills required

The integration tasks should be done by developers with knowledge of:

Android Studio

Java for Android

Android OS

Resources required

Integration may be performed on computers running Windows, Linux, or macOS.

The tools required are:

Android Studio

Android SDK tools: preferred latest version

JDK: preferred latest version

Android device (emulator is not supported)

Minimum SDK version is 21

Biometric capture SDK structure

The SDK's structure is displayed below.

Tips

App size optimization

After adding the SDK to your project you will observe that the size of application has grown significantly. This is because the SDK now includes native libraries for two ABIs: armeabi-v7a and arm64-v8a. What is generated is an .apk file that deploys to Google Play. Your application will contain both application binary interfaces even if one is not used.

Android App Bundle is the solution for this issue. Instead of generating an .apk, it is possible to generate a bundle (.aab). When a user installs the application from the store that contains the bundle, only the required components for the user's specific device will be downloaded.

Additionally, the maximum size of the bundle increases to 150 MB (100 MB is still maximum size for .apk files).

No changes on Google Play are required - just upload .aab instead of .apk. Also, no development in the application project is required.

It is recommended that the bundle options be declared inside the Gradle file, for example:

```groovy
android {
    ...
    bundle {
        density {
            enableSplit true
        }
        abi {
            enableSplit true
        }
        language {
            enableSplit false
        }
    }
}
```

More about app bundles can be found here.

License manager

The purpose of this section is to show the API of the license management portion of the SDK, and expose the objects involved.

License manager

The License manager is the main entry point to use the SDK. You can manage licenses through LicenseManager.

Note: A valid license is required before using any feature of the SDK.

provideLicenseManager

This method provides an instance of LicenseManager with a predefined LKMS profile. Operation with LicenseManager should be executed before starting capture.

```kotlin
val manager: LicenseManager = LicenseManager.provideLicenseManager(LkmsProfileId, LkmsApiKey, lkmsUrl)
```

Activating license

This function takes care of making sure a valid license is stored on the device. This process is crucial and must occur each time before any SDK usage. In most cases it does not require any effort from integrator side. However, it might fail in some corner cases that are listed below.

Method handles license management on calling thread.

Callback solution:

```kotlin
val activationResult = manager.activate(object : LicenseActivationListener {
    override fun onLicenseActivated() {
        //License fetched and activated with success.
    }

    override fun onLicenseActivationFailed(licenseActivationError: LicenseActivationError) {
        //Failed to fetch or activate the license.
    }
}, applicationContext)
```

Coroutines solution: It returns LicenseActivationResult

```kotlin
val activationResult = manager.activate(applicationContext)
when (activationResult) {
    is LicenseActivationSuccess -> {
        //License fetched and activated with success.
    }
    is LicenseActivationError -> {
        //Failed to fetch or activate the license.
    }
}
```

LicenseActivationResult

This is the result of license activation when the coroutines solution is used. The instance is one of the following types:

LicenseActivationSuccess

LicenseActivationError

This is the information about why the license cannot be activated.

| Attribute | Description |
|---|---|
| type ActivationErrorType | The type of error why license activation failed |
| message String | The activation failure reason. |

ActivationErrorType

| Attribute | Description |
|---|---|
| | Profile expired, all licenses won’t work anymore. (Contact with support) |
| | No more licenses can be consumed. (Contact with support) |
| | Credentials and/or profile information are wrong. |
| | Connection issue. Make sure that your internet connection is stable. |
| | Unknown issue. |
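The error categories above suggest different reactions in the app: retry, fix the configuration, or contact support. The sketch below shows one way to map an activation result to a next step. All types here are stand-ins that only mirror the names used in this guide, and the enum constant names are hypothetical placeholders, because the real attribute names are not listed in the table above. Treating an unknown issue as a support case is a choice made for this example.

```kotlin
// Stand-in types mirroring the names used in this guide; the real SDK classes may differ.
// The enum constants are hypothetical placeholders, not the SDK's actual names.
enum class ActivationErrorType { PROFILE_EXPIRED, NO_LICENSES_LEFT, WRONG_CREDENTIALS, NETWORK, UNKNOWN }

sealed class LicenseActivationResult
object LicenseActivationSuccess : LicenseActivationResult()
class LicenseActivationError(val type: ActivationErrorType, val message: String) : LicenseActivationResult()

// What the app decides to do next; purely illustrative.
enum class NextStep { START_CAPTURE, RETRY_LATER, FIX_CONFIGURATION, CONTACT_SUPPORT }

/** Maps an activation result to the reaction suggested by the error descriptions. */
fun nextStepFor(result: LicenseActivationResult): NextStep = when (result) {
    is LicenseActivationSuccess -> NextStep.START_CAPTURE
    is LicenseActivationError -> when (result.type) {
        ActivationErrorType.NETWORK -> NextStep.RETRY_LATER
        ActivationErrorType.WRONG_CREDENTIALS -> NextStep.FIX_CONFIGURATION
        ActivationErrorType.PROFILE_EXPIRED,
        ActivationErrorType.NO_LICENSES_LEFT,
        ActivationErrorType.UNKNOWN -> NextStep.CONTACT_SUPPORT
    }
}
```

Keeping this mapping in one function makes it easy to adjust once the actual error types of the SDK version in use are known.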

Getting started

This guide illustrates the required steps to configure a minimally viable project for capturing biometrics using the Biometric Capture SDK.

Downloadable sample apps are here:

Creating your app

Add the SDK library to your app's build.gradle:

```groovy
implementation("morpho.mph_bio_sdk.android:SmartBio:version")
```

If you have not configured the repository for the SDK yet, see the introduction, which explains how to do that.

Add the correct plugin dependency if you use face capture.

Plugins are special extensions to the SDK that might add or change its features. In this way, users can save memory and increase performance by picking plugins they need.

For face capture there are three plugins to choose from. There should be only one plugin selected during the build. If more than one for a specific flavor is selected, it will cause a MultipleFaceInitPluginsException.

Available plugins

plugin-face-normal should be used when WebBioServer is not used and there is need for strong security during local liveness challenges.

plugin-face-lite should be used when WebBioServer is used because it can reduce the size of an application significantly.

plugin-face-cr2dmatching should be used for local usage with additional security feature for FaceLiveness.ACTIVE mode.

Example plugin dependency for face capture:

```groovy
implementation 'com.idemia.smartsdk:plugin-face-normal:version'
```

Plugins for face matching

If you use the finger only variant you can skip this section because the proper plugin is already attached to that version.

For face matching there are three options to choose from. Keep in mind that these algorithms are not compatible.

Stored templates will not be successfully matched against templates from another algorithm.

plugin-algorithm-f5-4-low75: This has been improved to perform better with default compression. If a previous SDK has been used before and there is a user base with stored templates already, then full migration will be required. All templates must be generated again with the new plugin in use.

plugin-algorithm-f5-0-vid81: This is the default algorithm that is compatible with previous SDK versions.

plugin-algorithm-fingerv9: This provides only finger matching.

plugin-algorithm-f6-5-low70: Recommended algorithm for face matching, introduced in SDK version 4.44.0. There is no compatibility in the template level with other plugins.

Remember to attach only one matching plugin per flavor, otherwise a MultipleInitBlockPluginsException will occur.

Add the CaptureView to the layout where you handle the biometric capture:

```xml
<com.idemia.smartsdk.preview.CaptureView
    android:id="@+id/captureView"
    android:layout_width="match_parent"
    android:layout_height="match_parent" />
```

On your activity or fragment get a reference to this view:

```kotlin
// R.id refers to the app's own resources, where captureView is declared.
val cameraPreview: CaptureView = findViewById(R.id.captureView)
```

Activate your license. This can be done in the onCreate(Bundle savedInstanceState) or in a previous stage of your app. This must be done only once.

```kotlin
val manager = LicenseManager.provideLicenseManager(LkmsProfileId, LkmsApiKey, lkmsUrl)
val activationResult = manager.activate(applicationContext)
when (activationResult) {
    is LicenseActivationSuccess -> {
        //License fetched and activated with success.
    }
    is LicenseActivationError -> {
        //Failed to fetch or activate the license.
    }
}
```

For security reasons, consider storing LKMS credentials outside the source code (for example, in Gradle properties).

Prepare capture settings. For face capture, you should use FaceCaptureOptions.

```kotlin
val captureOptions = FaceCaptureOptions(FaceLiveness.PASSIVE)
captureOptions.camera = Camera.FRONT
captureOptions.captureTimeout = 120
captureOptions.overlay = Overlay.OFF
```

In the onResume() method of your activity or fragment, obtain a valid reference to the IBioCaptureHandler using the previously created capture options.

```kotlin
override fun onResume() {
    //Create handler
    BioSdk.createFaceCaptureHandler(this, captureOptions, object : MscAsyncCallbacks<IFaceCaptureHandler> {
        override fun onPreExecute() {
            // Optional hook on the builtin Android AsyncTask callback `onPreExecute`
        }

        override fun onSuccess(result: IFaceCaptureHandler) {
            // Indicates that initialization succeeded, the returned handler can be used to start the capture.
            faceCaptureHandler = result
        }

        override fun onError(e: BioCaptureHandlerError) {
            // An error has occurred during the initialization
        }
    })
    super.onResume()
}
```

Add the listeners for the events to the handler:

```kotlin
faceCaptureHandler.setFaceCaptureResultListener(object : FaceCaptureResultListener {
    override fun onCaptureSuccess(image: FaceImage) {
        //Successfully captured image
    }

    override fun onCaptureFailure(captureError: CaptureError, biometricInfo: IBiometricInfo, extraInfo: Bundle) {
        //Capture failure
    }
})

faceCaptureHandler.setFaceCaptureFeedbackListener(FaceCaptureFeedbackListener { feedback ->
    //Face capture feedback info, like move your face to the right
})

faceCaptureHandler.setFaceTrackingListener(FaceCaptureTrackingListener {
    //Tracking info to know where the face is.
})
```

Initialize the preview and capture to start receiving events. It should happen after creating the capture handler. The most common place for this would be onResume:

```kotlin
coroutineScope.launch {
    faceCaptureHandler.startPreview()
    faceCaptureHandler.startCapture()
}
```

Destroy the handler when onPause() is invoked:

```kotlin
override fun onPause() {
    // Same handler instance that was obtained in onResume
    faceCaptureHandler?.apply {
        stopCapture()
        stopPreview()
        destroy()
    }
    super.onPause()
}
```

In your manifest, you must add:

```xml
<!--Declare new permissions-->
<permission
    android:name="your.new.permission.NEW_READ_MPH_BIO_SDK_PROVIDER"
    android:protectionLevel="signature" /> <!--unless otherwise required, set the maximum security permission -->
<permission
    android:name="your.new.permission.NEW_WRITE_MPH_BIO_SDK_PROVIDER"
    android:protectionLevel="signature" /> <!--unless otherwise required, set the maximum security permission -->
```

```xml
<!--The provider must be defined by the implementing app so as to allow multiple apps-->
<!--Bio store provider-->
<provider
    android:name="com.morpho.mph_bio_sdk.android.sdk.content_provider.BioStoreProvider"
    android:authorities="your.new.authority"
    android:readPermission="your.new.permission.NEW_READ_MPH_BIO_SDK_PROVIDER"
    android:writePermission="your.new.permission.NEW_WRITE_MPH_BIO_SDK_PROVIDER"
    tools:replace="android:authorities, android:readPermission, android:writePermission">
</provider>
```

Analytics

Capture SDK offers a logging mechanism that collects analytics data about SDK usage, and sends this data to IDEMIA's server. This data helps IDEMIA to improve Capture SDK and the likelihood of integrator success within the app. It is strongly recommended to activate the analytics mechanism.

You can enable or disable sending analytics data.

You can choose to send analytics data only when you are connected to a Wi-Fi network, so as not to use your cellular connection.

Analytics data that IDEMIA collects contains only technical data.

No sensitive personal data is collected.

IDEMIA does not collect any images.

The analytics data that we collect includes the following information:

Application name, bundle id, version

Capture SDK and RemoteLogger libraries versions

Device model and operating system version

Technical information about performed face, finger, and document capture (such as: capture mode used; timestamp; reason of error; time needed to perform a capture; quality of captured image; and light condition)

Technical information about performed authentication and identification events (such as: used threshold, duration, and obtained score)

Other technical information (such as: image compression, occurred errors, and SDK performance) that does not contain personal data

You can disable analytics reporting using the appropriate SDK method.

Capture SDK plugins

Plugins have been introduced to give even more flexibility than variants of the SDK. Every integrator might have different needs and size requirements. A new plugin mechanism allows for greater flexibility. Plugins are split to two groups: feature and algorithm.

Feature plugins

Provides various SDK functionalities such as: face capture, document capture, and optical character recognition (OCR).

Algorithm plugins

Provides for extracting biometric data from images, matching this data, and storing it as templates.

How it works

Capture SDK still has previous variants with predefined plugins in the dependency list that are still required. However, some features might be different, like the matching algorithm or face capture challenge behavior. In such cases, these features might be configured via adding specific plugins.

All that must be done to add a plugin is to put the proper dependency to a project's module that will be using the plugin (How to use them).

Benefits

The obvious benefit is reducing the number of SDK variants which makes it easier to pick the proper SDK dependency. It also brings flexibility to the product, namely, the ability to mix or replace features in the future or even extend SDK possibilities by implementing your own plugins.

How to use them

Plugins are just ordinary dependencies. All that must be done is to add the proper dependency for the plugins that are needed. Read carefully about allowed combinations and predefined plugins in SDK variants.

Here is a snippet with all available plugins:

```groovy
//Feature plugins
//Double quotes are used so that Groovy interpolates $version
implementation "com.idemia.smartsdk:plugin-finger:$version"
implementation "com.idemia.smartsdk:plugin-face:$version"
implementation "com.idemia.smartsdk:plugin-face-normal:$version"
implementation "com.idemia.smartsdk:plugin-face-lite:$version"
implementation "com.idemia.smartsdk:plugin-face-cr2dmatching:$version"
implementation "com.idemia.smartsdk:plugin-improved-pdf417-detection:$version"

//Algorithm plugins
implementation "com.idemia.smartsdk:plugin-algorithm-f5-0-vid81:$version"
implementation "com.idemia.smartsdk:plugin-algorithm-f5-4-low75:$version"
implementation "com.idemia.smartsdk:plugin-algorithm-f6-0-idd80:$version"
implementation "com.idemia.smartsdk:plugin-algorithm-f6-5-low70:$version"
implementation "com.idemia.smartsdk:plugin-algorithm-fingerv9:$version"
```

Allowed combinations

Here are all possible combinations of plugins for specific use cases.

As mentioned above, the SDK variants have predefined plugins dependency, so that only a few must be defined.

Check which plugins are predefined in the SDK variant you use.

| Face capture |
|---|
| plugin-face |
| plugin-face-lite |
| plugin-face-normal |
| plugin-face-cr2dmatching |
| Available algorithm plugins |
| plugin-algorithm-f5-4-low75 |
| plugin-algorithm-f5-0-vid81 |
| plugin-algorithm-f6-5-low70 |
| plugin-algorithm-f6-0-idd80 |

| Finger capture |
|---|
| plugin-finger |
| Available algorithm plugins |
| plugin-algorithm-f5-4-low75 |
| plugin-algorithm-f5-0-vid81 |
| plugin-algorithm-f6-0-idd80 |
| plugin-algorithm-f6-5-low70 |
| plugin-algorithm-fingerv9 |

| Document capture |
|---|
| plugin-improved-pdf417-detection |

Warning: Only one of: plugin-face-lite, plugin-face-normal, plugin-face-cr2dmatching can be used at a time. The integrator must pick one of them. A MultipleFaceInitPluginsException will occur if more than one has been picked.
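Because a wrong combination only surfaces as an exception at runtime, it can help to check a module's dependency list earlier, for example in a unit test. The sketch below does that for the two exclusive groups described in this guide: the face plugins named in the warning above, and the matching algorithm plugins. The helper `findPluginConflicts` is hypothetical and is not part of the SDK or of Gradle; only the artifact names come from this guide.

```kotlin
// Artifact names are taken from this guide; the validation helper is hypothetical.
val exclusiveFacePlugins = setOf("plugin-face-normal", "plugin-face-lite", "plugin-face-cr2dmatching")
val algorithmPrefix = "plugin-algorithm-"

/** Extracts the artifact name from a "group:artifact:version" coordinate. */
fun artifactOf(coordinate: String): String = coordinate.split(":").getOrElse(1) { coordinate }

/** Returns human-readable problems; an empty list means the selection looks consistent. */
fun findPluginConflicts(coordinates: List<String>): List<String> {
    val artifacts = coordinates.map(::artifactOf).distinct()
    val face = artifacts.filter { it in exclusiveFacePlugins }
    val algorithms = artifacts.filter { it.startsWith(algorithmPrefix) }
    val problems = mutableListOf<String>()
    if (face.size > 1) problems += "More than one face plugin selected: $face"
    if (algorithms.size > 1) problems += "More than one matching plugin selected: $algorithms"
    return problems
}
```

The check works per list of coordinates, so a project with several flavors would run it once for each flavor's dependency list.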

SDK variants and their plugins

Each SDK variant delivers something different; check carefully what each variant contains. Plugins that are already predefined in a variant should not be added again in a module that uses that variant. As can be seen below, no document-related plugins must be added for variants that deliver this feature. In other words, variants contain all plugins that are required and have no alternatives.

| Capture SDK |
|---|
| plugin-face |
| plugin-finger |

Plugins that might be added for a Capture SDK variant:

One of: plugin-face-normal, plugin-face-lite, plugin-face-cr2dmatching

One of: plugin-algorithm-f5-4-low75, plugin-algorithm-f5-0-vid81, plugin-algorithm-f6-0-idd80, plugin-algorithm-f6-5-low70, plugin-algorithm-fingerv9 (this one is not recommended if face matching will be performed)

| Biometric Capture SDK |
|---|
| plugin-face |
| plugin-finger |

Plugins that can be added for the Biometric Capture SDK variant:

One of: plugin-face-normal, plugin-face-lite, plugin-face-cr2dmatching

One of: plugin-algorithm-f5-4-low75, plugin-algorithm-f5-0-vid81, plugin-algorithm-f6-0-idd80, plugin-algorithm-f6-5-low70, plugin-algorithm-fingerv9 (this one is not recommended if face matching is going to be performed)

| SmartFinger |
|---|
| plugin-finger |
| plugin-algorithm-fingerv9 |

There are no plugins for the SmartFinger variant.

| SmartFace |
|---|
| plugin-face |

Plugins that can be added for the SmartFace variant:

One of: plugin-face-normal, plugin-face-lite, plugin-face-cr2dmatching

One of: plugin-algorithm-f5-4-low75, plugin-algorithm-f5-0-vid81, plugin-algorithm-f6-0-idd80, plugin-algorithm-f6-5-low70

| SmartFaceDoc |
|---|
| plugin-face |
| plugin-face-lite |

Plugins that can be added for the SmartFaceDoc variant:

One of: plugin-algorithm-f5-4-low75, plugin-algorithm-f5-0-vid81, plugin-algorithm-f6-0-idd80, plugin-algorithm-f6-5-low70

However, this variant is meant to be used with WebBioServer which performs matching operations (no need to do that locally).

For SDK variants with document features, plugin-improved-pdf417-detection may be added in order to improve the capture of barcodes.

Feature plugins descriptions

plugin-face

Basic plugin needed for face capture. Usually it is predefined in every SDK variant that delivers face capture functionality.

plugin-face-normal

Should be used for face capture when WebBioServer is not used and there is a need for strong security during local liveness challenges.

plugin-face-lite

Should be used when WebBioServer is used for liveness check during face capture, because it can reduce the size of application significantly.

plugin-face-cr2dmatching

Should be used for local usage (without WebBioServer) when additional security feature for FaceLiveness.ACTIVE mode is needed.

plugin-finger

Plugin needed for finger capture. Usually it is predefined in every SDK variant that delivers finger capture functionality.

plugin-improved-pdf417-detection

Plugin that can be used to speed up barcode capture.

Algorithm plugins descriptions

plugin-algorithm-f6-0-idd80

It is more accurate than f5-4-low75 and much smaller than f5-0-vid81.

plugin-algorithm-f5-4-low75

Improved to perform better with default compression. If a previous SDK has been used before and there is a user base with stored templates already, then full migration of the user's biometrics will be required. All templates must be generated again with the new plugin in use.

plugin-algorithm-f5-0-vid81

This is the default algorithm that is compatible with previous SDK versions.

plugin-algorithm-f6-5-low70

Recommended algorithm for face capture. It is more accurate than f6-0-idd80. If a previous SDK has been used before and there is a user base with stored templates already, then full migration of the user's biometrics will be required. All templates must be generated again with the new plugin in use.

plugin-algorithm-fingerv9

Provides only finger matching feature. It is best to pick this one when only finger matching will be performed.

WARNING

The algorithms are NOT compatible with each other. The templates generated by one of the algorithms cannot be processed with the other one; that is, it is not possible to match a template generated with F6_0_IDD80 against a template generated with F5_4_LOW75 or F5_0_VID81. If an integrator wants to change the algorithm in their solution, all the stored templates must be recreated with the new algorithm.
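One way to guard against cross-algorithm matching in an app's own storage layer is to record, next to each stored template, which algorithm produced it. The following sketch illustrates that idea. `StoredTemplate` and both helper functions are hypothetical, since the SDK's real template type is not shown in this guide. The enum constants F5_0_VID81, F5_4_LOW75, and F6_0_IDD80 follow the names in the warning above, while F6_5_LOW70 and FINGERV9 are assumed spellings derived from the plugin names.

```kotlin
// Hypothetical model used only for illustration; not an SDK type.
enum class MatchingAlgorithm { F5_0_VID81, F5_4_LOW75, F6_0_IDD80, F6_5_LOW70, FINGERV9 }

class StoredTemplate(val algorithm: MatchingAlgorithm, val data: ByteArray)

/** True only when both templates were produced by the same algorithm. */
fun canBeMatched(candidate: StoredTemplate, reference: StoredTemplate): Boolean =
    candidate.algorithm == reference.algorithm

/** Lists the stored templates that must be regenerated after switching to [target]. */
fun templatesToRegenerate(stored: List<StoredTemplate>, target: MatchingAlgorithm): List<StoredTemplate> =
    stored.filter { it.algorithm != target }
```

With such a tag in place, a migration to a new algorithm plugin can find the affected users up front instead of discovering failed matches later.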

SDK size

This is the estimated size of an SDK variant with all its dependencies, like predefined plugins (see Plugins section). The UI-extension is not included in size as it is not a predefined dependency.

| SDK variant | Size |
|---|---|
| CaptureFace | 24.05 MB |
| CaptureDocument | 43.98 MB |
| CaptureFinger | 17.02 MB |
| CaptureBiometry | 28.52 MB |
| CaptureBiometry_document | 48.86 MB |
| CaptureFace_document | 44.39 MB |

Plugins size

| Plugin | Size |
|---|---|
| plugin-face | 7.59 KB |
| plugin-face-normal | 6.75 MB |
| plugin-face-lite | 4.79 MB |
| plugin-face-cr2dmatching | 6.75 MB |
| plugin-finger | 794.64 KB |
| plugin-algorithm-f5-4-low75 | 12.30 MB |
| plugin-algorithm-f5-0-vid81 | 4.08 MB |
| plugin-algorithm-f6-5-low70 | 7.45 MB |
| plugin-algorithm-fingerv9 | 1.51 KB |
| plugin-improved-pdf417-detection | 8.81 MB |

Integration guide

The purpose of this document is to show the API of the SDK and expose all of its involved objects.

Use cases

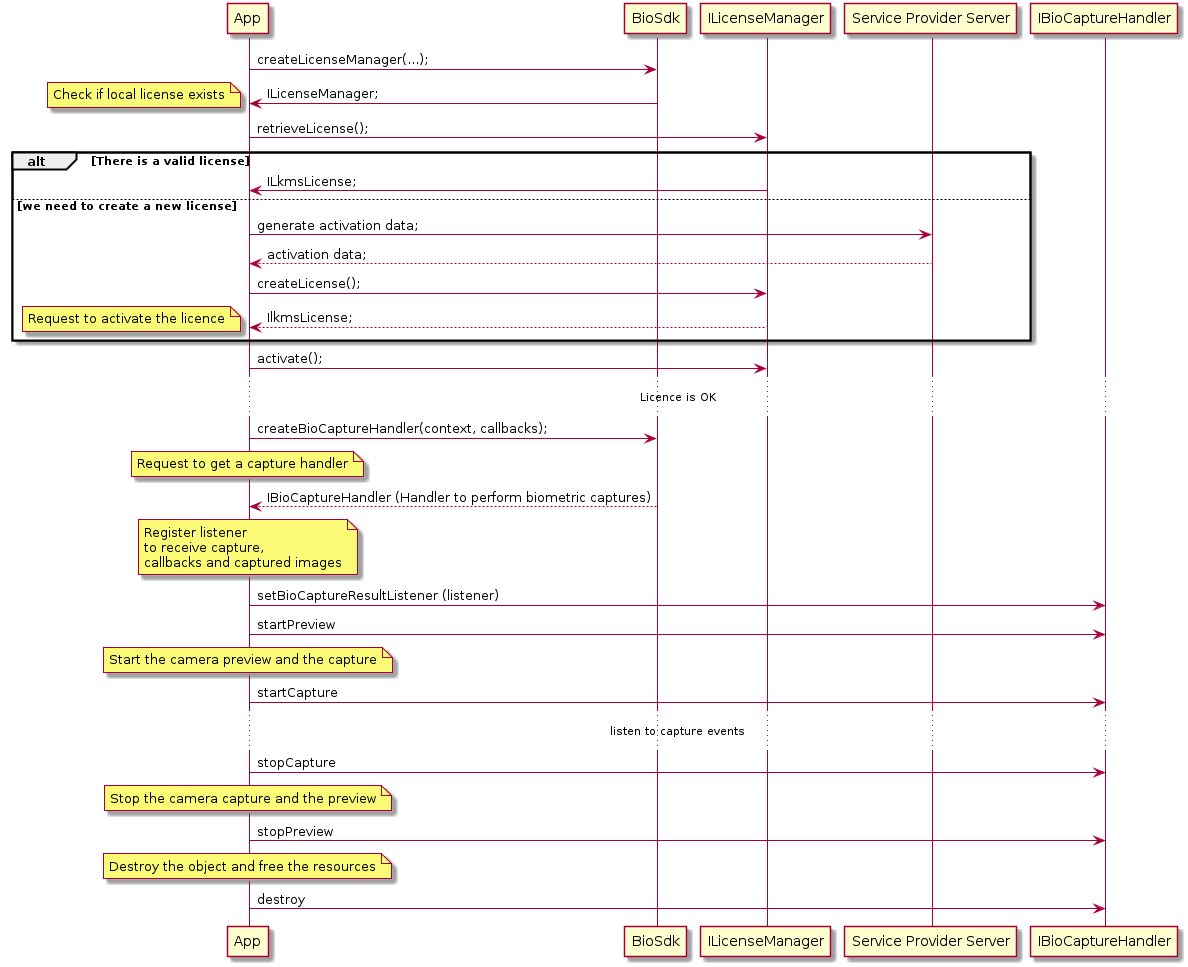

Capture biometrics

Below is the generic execution flow to perform a biometric capture (Get Picture), and get information about the biometry. For example, getting a picture and moving your head to the left.

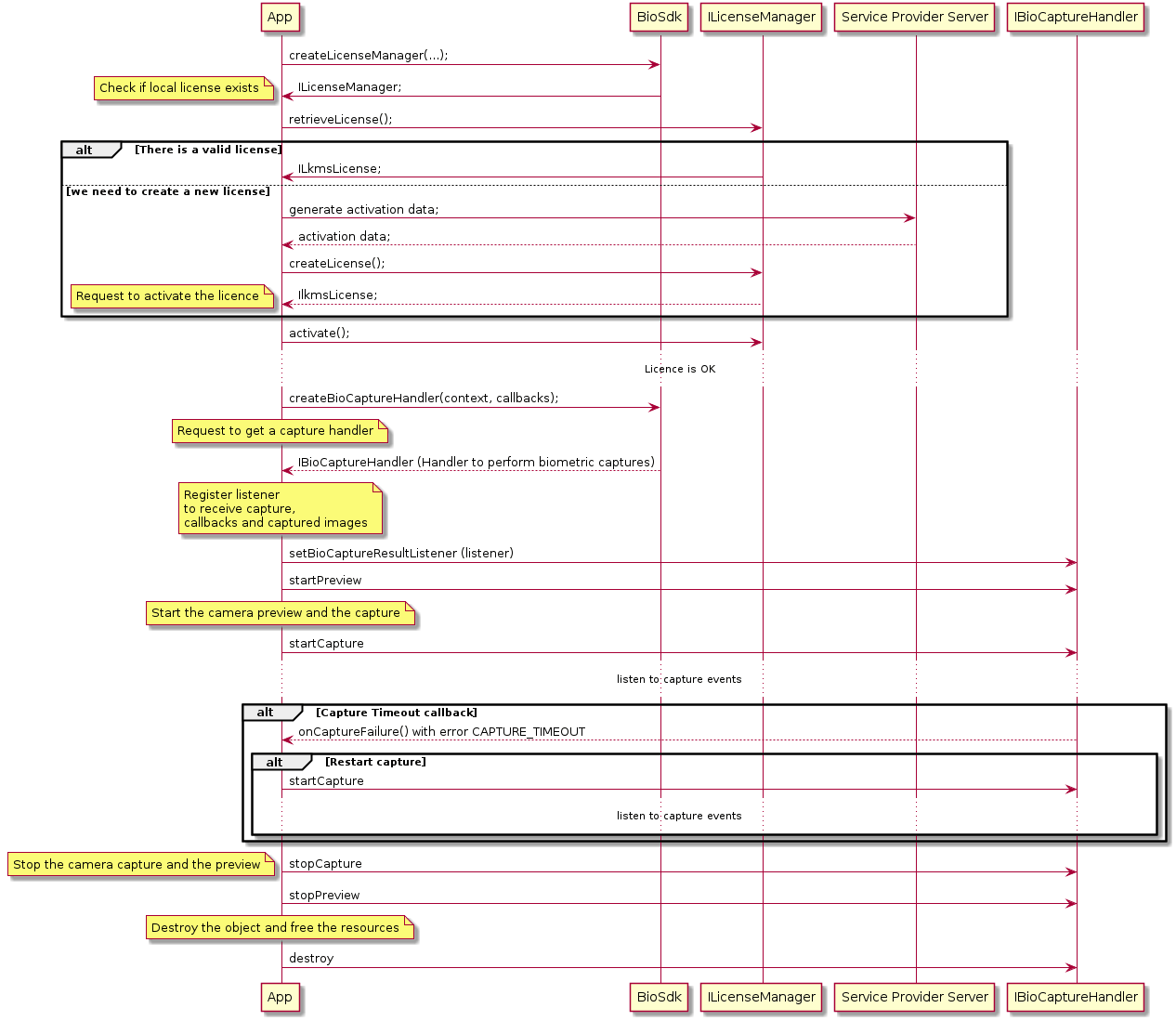

Capture timeout

Below is the generic execution flow to be followed when a capture timeout occurs.

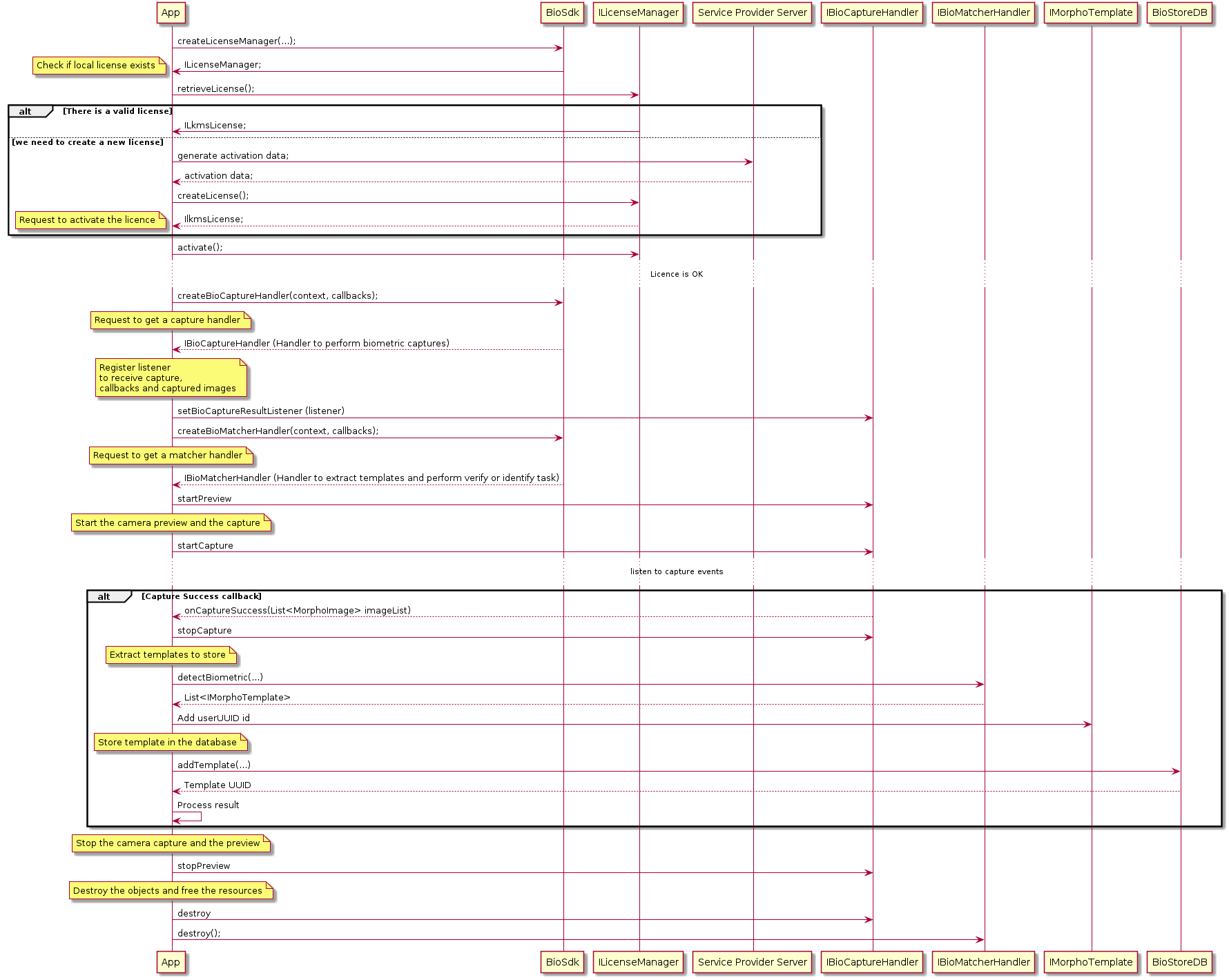

Capture enroll

Below is the generic execution flow to perform a biometric capture (Get Picture). After that, the biometrics template is extracted from the image returned by the capture component. The biometric template is linked to one user using the userUUID. The UUID of this template and the userUUID are stored in a database.

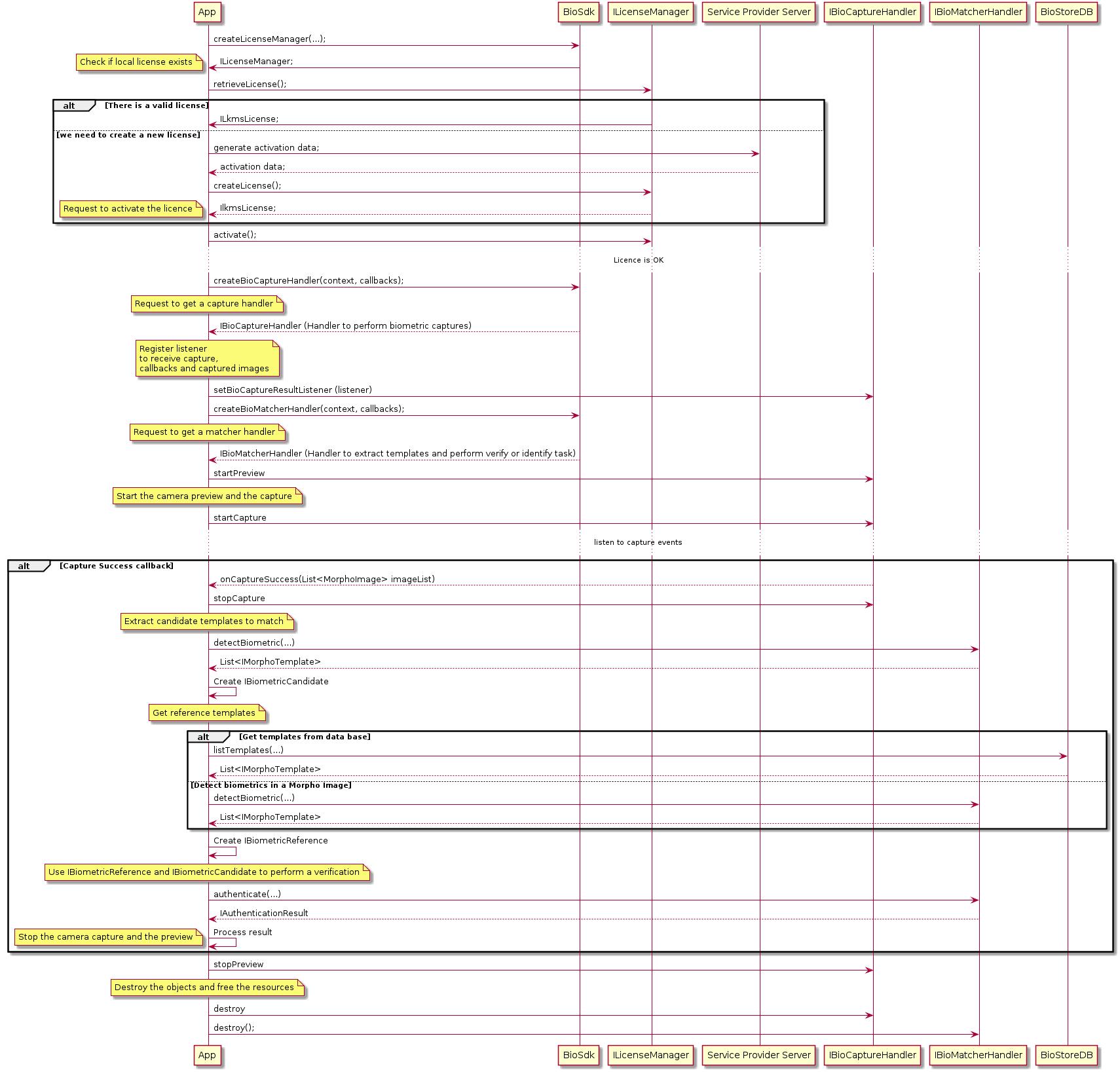
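The link between a template and its user, as described for enrollment, can be sketched with a small in-memory store. This is a hypothetical stand-in for the database mentioned above, not the BioStoreDB API, which is not shown in this section; the template itself is represented as an opaque byte array.

```kotlin
import java.util.UUID

// Hypothetical in-memory stand-in for the database used during enrollment.
class TemplateRecord(val templateUuid: UUID, val userUuid: UUID, val template: ByteArray)

class InMemoryTemplateStore {
    private val records = mutableMapOf<UUID, TemplateRecord>()

    /** Stores a template for the user and returns the UUID assigned to the template. */
    fun enroll(userUuid: UUID, template: ByteArray): UUID {
        val templateUuid = UUID.randomUUID()
        records[templateUuid] = TemplateRecord(templateUuid, userUuid, template)
        return templateUuid
    }

    /** Returns every template enrolled for the given user. */
    fun templatesOf(userUuid: UUID): List<TemplateRecord> =
        records.values.filter { it.userUuid == userUuid }
}
```

Looking up templates by userUUID is what a later authentication step needs in order to build its list of reference templates.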

Capture authenticate

Below is the generic execution flow to perform a biometric capture (Get Picture). The biometrics template is then extracted from the image and returned by the capture component. These are the candidate templates that you must use to create an IBiometricCandidate.

After the IBiometricCandidate is created, a list of reference templates must be extracted. These will then be used to create an IBiometricReference object with which to match against the IBiometricCandidate and authenticate that the candidate templates belong to the user.

There are two ways to extract a list of template references: the first is to retrieve them from the database used during the enrollment process; the second is to extract the templates from another image with detectBiometrics(...).

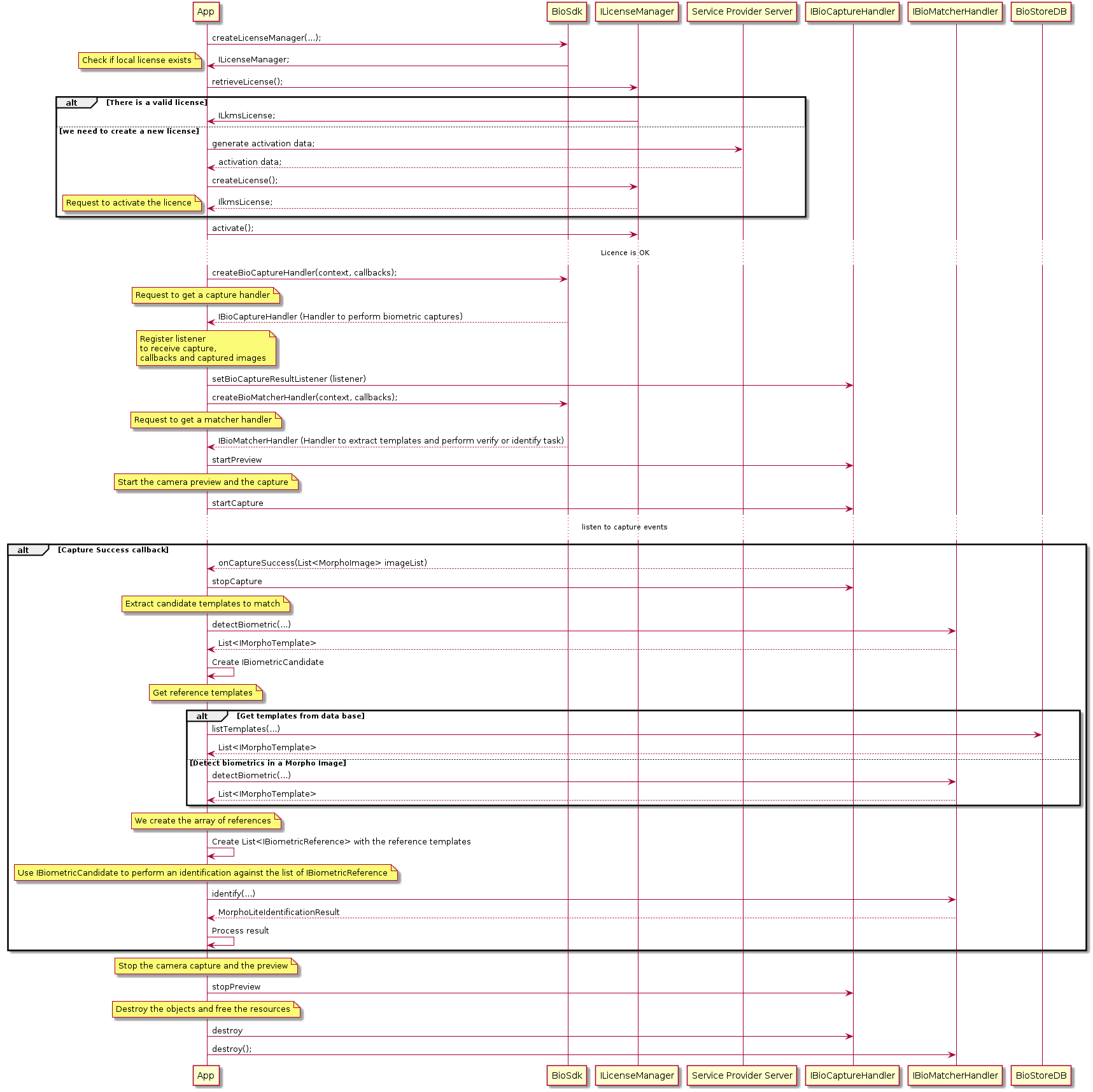

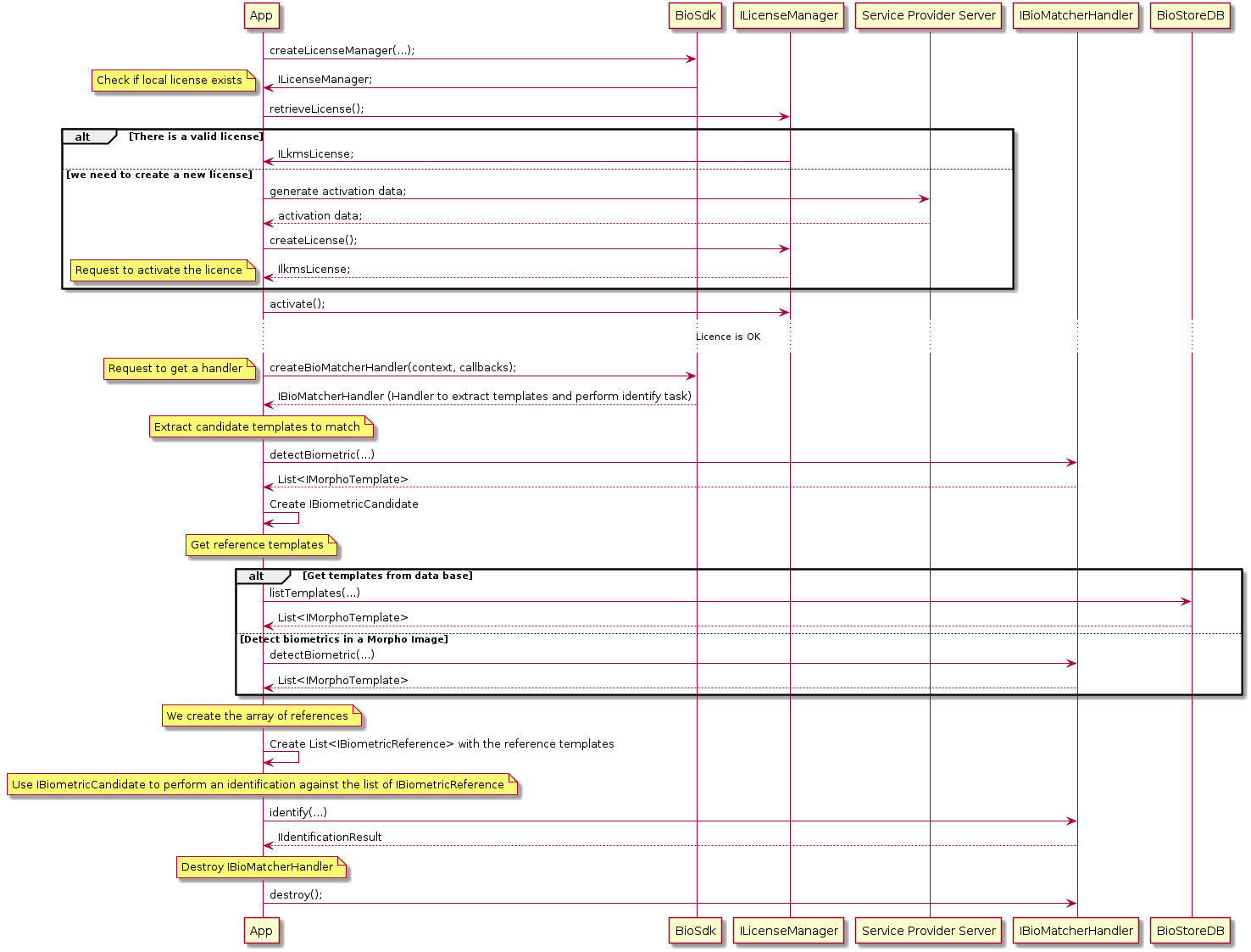

Capture identify

Below is the generic execution flow to perform a biometric capture (Get Picture). The biometrics template is then extracted from the image and returned by the capture component. These are the candidate templates which you must use to create an IBiometricCandidate.

After the IBiometricCandidate is created, a list of reference templates must be extracted. These will then be used to create an IBiometricReference object with which to match against the IBiometricCandidate and authenticate that the candidate templates belong to the user.

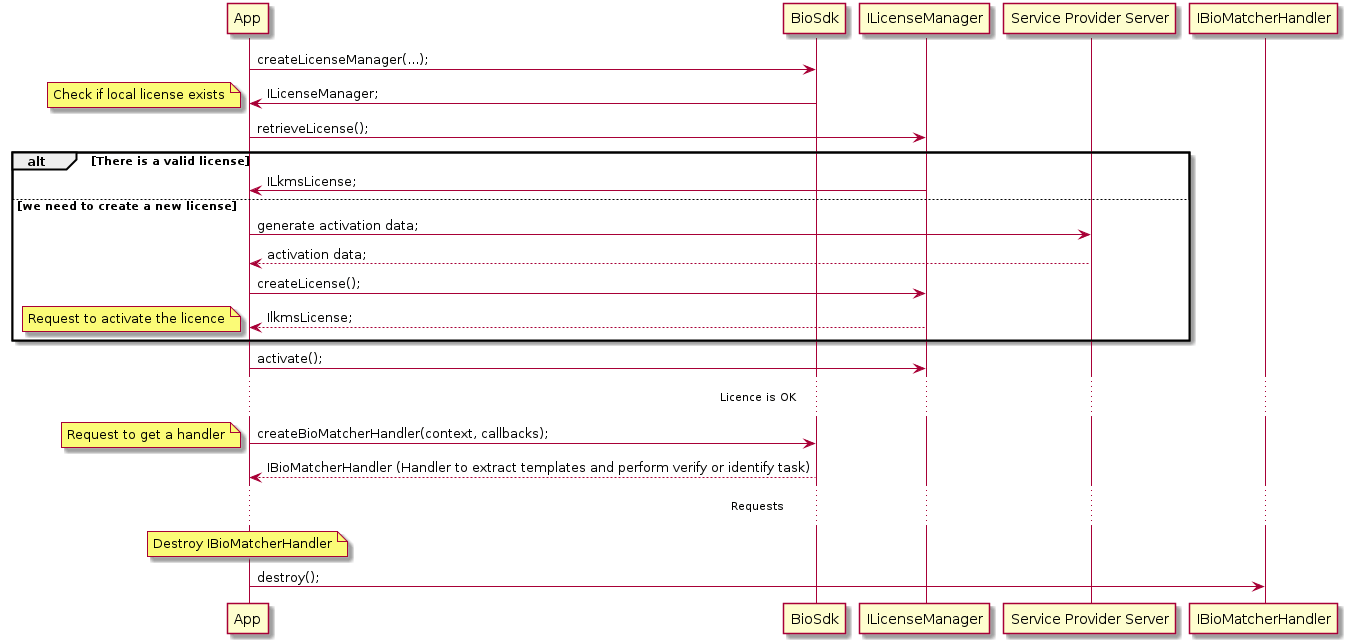

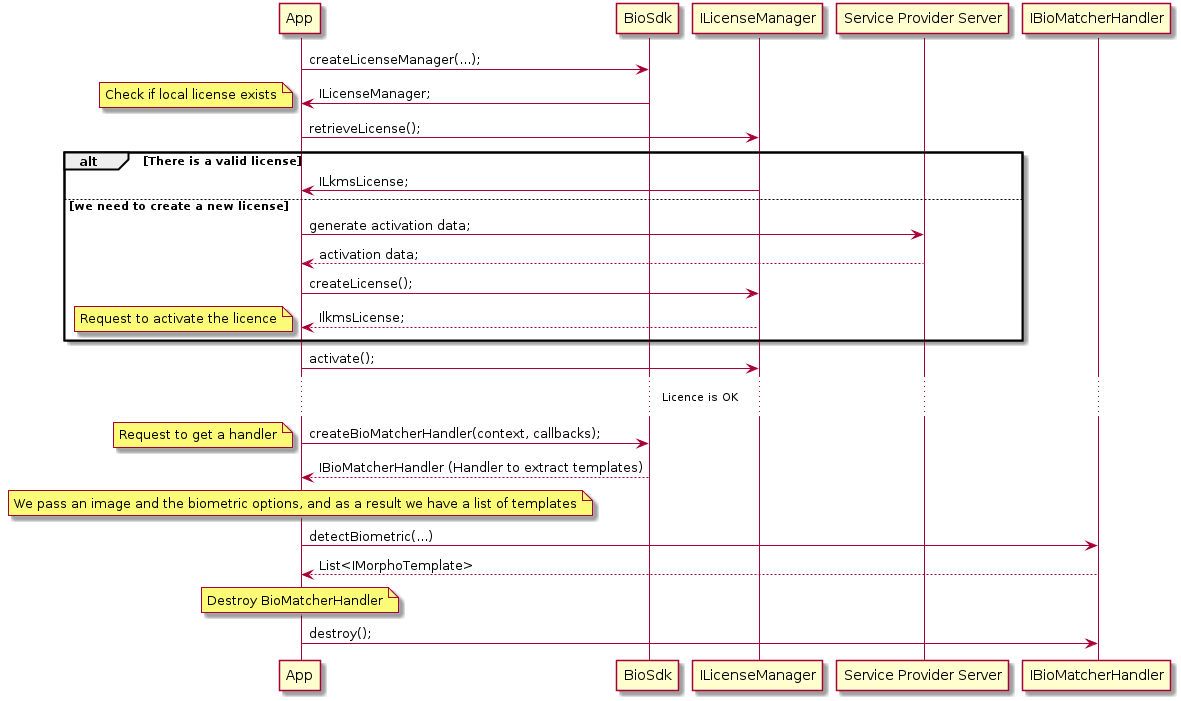

Creating BioMatcherHandler

Below is the generic execution flow to retrieve and release a BioMatcherHandler.

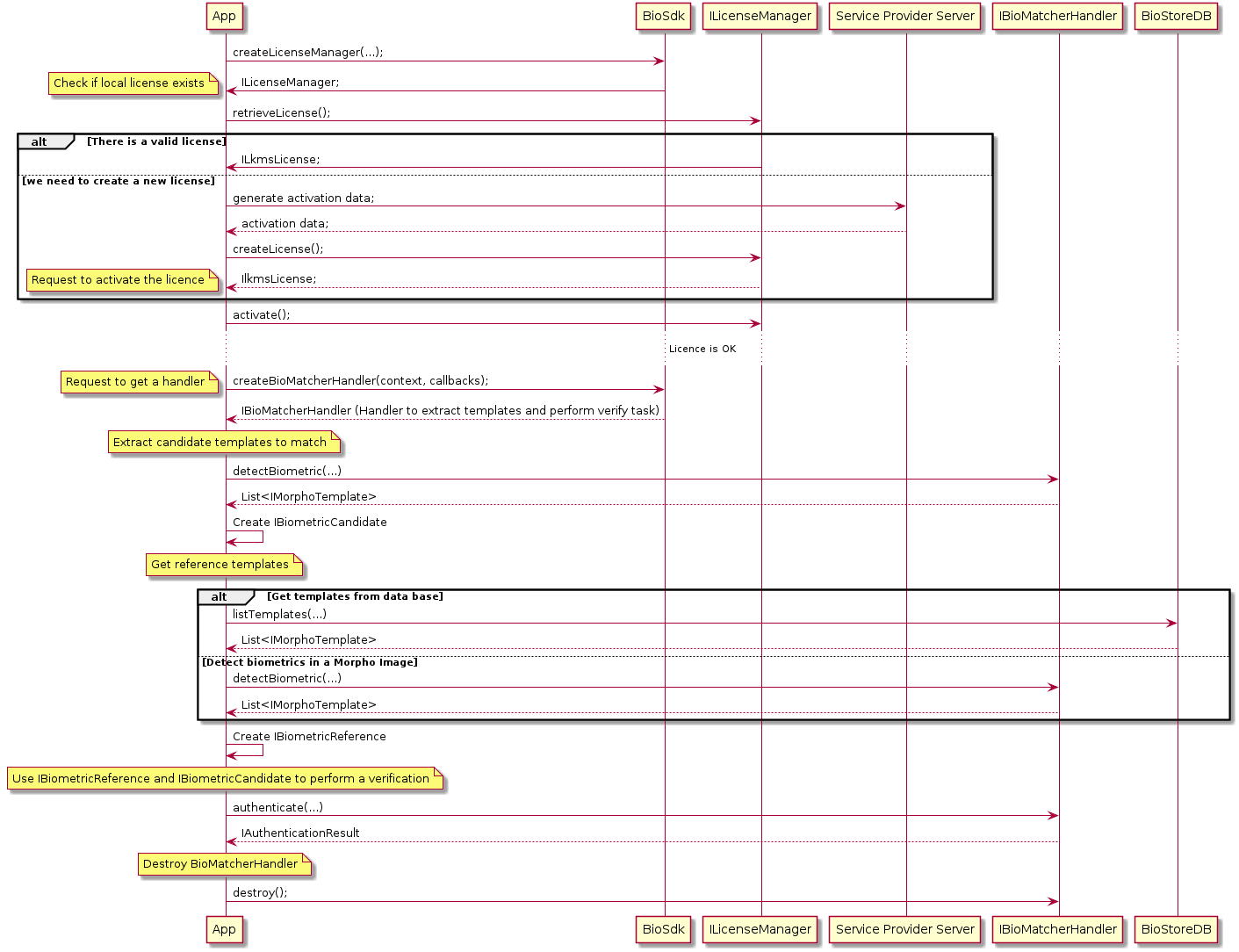

Authenticating

Below is the generic execution flow to perform a generic verification process which involves extracting the biometrics template from an image. These are the candidate templates which you must use to create an IBiometricCandidate.

After the IBiometricCandidate is created, a list of reference templates must be extracted. These will then be used to create an IBiometricReference object with which to match against the IBiometricCandidate and authenticate that the candidate templates belong to the user.

There are two ways to extract a list of template references: the first is to retrieve them from the database used during the enrollment process; the second is to extract the templates from another image with detectBiometrics(...).

Identifying

Below is the generic execution flow to perform a generic identification process which involves extracting the biometrics template from an image. These are the candidate templates which you must use to create an IBiometricCandidate.

After the IBiometricCandidate is created, a list of reference templates must be extracted. These will then be used to create an IBiometricReference object with which to match against the IBiometricCandidate and authenticate that the candidate templates belong to the user.
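The difference in control flow between authenticating and identifying can be sketched without the SDK. In the example below, authentication compares the candidate only with the references of one claimed user, while identification searches all references for the best match above a threshold, which is the usual meaning of the two terms in biometrics. Everything here is a hypothetical stand-in: a template is a plain list of numbers and `toyScore` is a toy similarity, not the SDK's matcher, and the real flow uses IBiometricCandidate and IBiometricReference instead.

```kotlin
import kotlin.math.abs

// Hypothetical stand-ins; only the 1:1 versus 1:N control flow is the point.
class Reference(val userId: String, val template: List<Double>)

/** Toy similarity in (0, 1]; identical templates score 1.0. */
fun toyScore(a: List<Double>, b: List<Double>): Double =
    1.0 / (1.0 + a.zip(b).map { (x, y) -> abs(x - y) }.sum())

/** 1:1 check: does the candidate match any reference template of the claimed user? */
fun authenticate(candidate: List<Double>, claimedUser: String, references: List<Reference>, threshold: Double): Boolean =
    references.filter { it.userId == claimedUser }
        .any { toyScore(candidate, it.template) >= threshold }

/** 1:N search: which enrolled user, if any, best matches the candidate? */
fun identify(candidate: List<Double>, references: List<Reference>, threshold: Double): String? =
    references.map { it to toyScore(candidate, it.template) }
        .filter { it.second >= threshold }
        .maxByOrNull { it.second }
        ?.first?.userId
```

In both cases the threshold decides whether a score counts as a match, so it should be chosen according to the guidance for the algorithm plugin in use.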

Detect biometrics

This describes detecting the biometrics in an IImage. This function is intended to be used to extract all the biometric templates contained in an image; for example, all the faces that are in an image.

Face capture - new API (recommended)

Introduction

To make integration of the SDK easier and more intuitive, a new API for Face Capture has been delivered. It is based on self-explanatory use cases, each of which provides the information specific to that use case. This allows the integrator to focus on working with the data provided by the SDK rather than on SDK configuration.

The old API is still available for backward compatibility for users who have already integrated it. Its description can be found here.

NOTE: The new API now supports both: remote and local liveness use cases.

Integration

License activation

The first mandatory step in using the SDK is to activate the license and grant the camera permission to the application. This part is common to the old API and the new one. License handling can be found here.

Adding FaceCaptureView

FaceCaptureView is a key component of the SDK. It not only provides the preview for the capture but is also the entry point to the SDK's API. This means that the integrator sets up the capture and orchestrates its flow on this component.

FaceCaptureView should be added to the layout of the capture Activity, in the same way as any other Android view. It must be visible to the end user.

```xml
<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:orientation="horizontal">

    <com.idemia.capture.face.api.FaceCaptureView
        android:id="@+id/captureView"
        android:layout_width="0dp"
        android:layout_height="0dp"
        app:layout_constraintBottom_toBottomOf="parent"
        app:layout_constraintEnd_toEndOf="parent"
        app:layout_constraintStart_toStartOf="parent"
        app:layout_constraintTop_toTopOf="parent" />

</androidx.constraintlayout.widget.ConstraintLayout>
```

As FaceCaptureView is also the entry point to the SDK, its methods must be invoked from the application logic. A reference to it can be obtained the old way, with findViewById:

```kotlin
// The view declared in the layout is a FaceCaptureView
private var captureView: FaceCaptureView? = null

override fun onCreate(savedInstanceState: Bundle?) {
    super.onCreate(savedInstanceState)
    setContentView(R.layout.your_activity_layout)

    captureView = findViewById(R.id.captureView)
}
```

or in the more popular way, through binding:

```kotlin
lateinit var binding: YourActivityCaptureBinding

override fun onCreate(savedInstanceState: Bundle?) {
    super.onCreate(savedInstanceState)
    binding = YourActivityCaptureBinding.inflate(layoutInflater)
    setContentView(binding.root)

    val captureView = binding.captureView
}
```

Creating use case

To perform a capture, the next step is to create the use case we are interested in. As mentioned above, the new API focuses on what we want to do and not on how we want to do it. To achieve that, use cases have been introduced. They define what will be done and require a set of listeners (at least one for the result) to provide information about the capture.

```kotlin
val remoteUseCase = RemoteUseCase(
    sessionId,
    RemoteCaptureListeners(
        faceTrackingInfo = faceTrackingInfoListener,
        captureFeedback = feedbackListener,
        captureLivenessListener = captureLivenessListener,
        stepInfoListener = stepInfoListener,
        passiveVideoListener = passiveVideoListener,
        captureResultListener = captureResultListener
    ),
    environmentInfo
)
```

More about use cases and their properties can be found in the dedicated section.

Setting up capture

When the license is activated, the camera permission is granted, and the use case has been created, it is time to set up the capture and perform it. To do that, use the setUp method on FaceCaptureView:

```kotlin
fun setUp(useCase: UseCase, lifecycle: Lifecycle?, uiSettings: UISettings?)
```

Each function argument is explained below:

| Parameter | Description |
|---|---|
| useCase UseCase | The use case instance that provides the type of capture and allows the integrator to get data from it. |
| lifecycle Lifecycle | Android component that allows the SDK to be lifecycle aware. This argument is optional. If it is not provided, the integrator has to manage the flow explicitly. If it is provided, there is no need to start, cancel, or destroy the flow. |
| uiSettings UISettings | Settings that provide details to the UI-Extensions library. If not provided, the integrator has to handle displaying the proper UI to the end user on their own. More information about it can be found here. |

If the Lifecycle component is not provided, the integrator has to call the following methods explicitly in order to provide a smooth and stable user experience:

start() - Starts the capture and liveness verification flow. Recommended to invoke in the onResume or onStart method of Android's lifecycle.

cancel() - Cancels the flow. Recommended to invoke in the onPause or onStop method of Android's lifecycle, depending on the desired effect.

destroy() - Cleans up the capture view and its data. Recommended to invoke in the onDestroy method of Android's lifecycle.
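When no Lifecycle is passed to setUp, the three calls can be forwarded from the hosting screen's lifecycle callbacks. The sketch below only illustrates that wiring: CaptureFlow, ManualCaptureLifecycle and RecordingFlow are hypothetical stand-ins (so that the snippet compiles without the SDK or Android), and only the method names start, cancel and destroy come from this guide.

```kotlin
// Hypothetical stand-in for the three FaceCaptureView methods named in this guide.
interface CaptureFlow {
    fun start()
    fun cancel()
    fun destroy()
}

// Forwards screen lifecycle callbacks to the capture flow.
// In a real Activity these bodies would live in onResume/onPause/onDestroy.
class ManualCaptureLifecycle(private val flow: CaptureFlow) {
    fun onResume() = flow.start()
    fun onPause() = flow.cancel()
    fun onDestroy() = flow.destroy()
}

// Simple recording implementation, useful for checking the call order.
class RecordingFlow : CaptureFlow {
    val calls = mutableListOf<String>()
    override fun start() { calls += "start" }
    override fun cancel() { calls += "cancel" }
    override fun destroy() { calls += "destroy" }
}
```

Whether cancel belongs in onPause or onStop depends on the desired effect, as noted above; the sketch picks onPause only for illustration.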

Once the above steps are done, the capture needs to be set up, as in the following example:

Kotlin
// [code in Activity]

fun setupView(useCase: UseCase) {
    binding.captureView.setUp(
        useCase,
        lifecycle,
        UISettings(passiveVideoSettings, passiveSettings, joinThePointsCaptureSettings)
    )
}

Here is an example of UISettings setup. Keep in mind that this class keeps the configuration for each FaceLiveness mode except NO_LIVENESS:

Kotlin
val joinThePointsCaptureSettings = joinThePointsChallengeSettings {
    useInterpolation = true
    scene {
        overlay {
            showOverlay = true
            imageRes = R.drawable.ic_face_overlay
            marginVertical = R.dimen.default_face_overlay_vertical_padding
            marginHorizontal = R.dimen.default_face_overlay_vertical_padding
            text {
                text = R.string.default_overlay_text
                textSize = R.dimen.default_overlay_text_size
                textColor = Color.parseColor(Colors.text_black)
            }
        }
        capturedLineOpacity = 0.5f
        pointer {
            type = PointerType.PULSING
            collisionWithTargetAction = PointerCollisionAction.NONE
        }
        target {
            pulseAnimation { waves = 3 }
            showMarkOnCurrentTarget = true
        }
        verticalTilt { enabled = false }
        tapping { enabled = false }
        result {
            failureImageResId = R.drawable.ic_challenge_failed
            successImageResId = R.drawable.ic_challenge_success
        }
    }
}

val passiveSettings = passiveCaptureSettings {
    scene {
        background {
            colorEnd = Color.parseColor("#189482")
            colorStart = Color.parseColor("#38ddb8")
        }
        previewScale {
            scaleX = 1.0f
            scaleY = 1.0f
        }
        feedback { colorText = Color.parseColor(Colors.white) }
        overlay { showOverlay = true }
        tapping {
            colorBackground = Color.parseColor("#FAFAFA")
            colorImage = Color.parseColor(Colors.black)
            colorText = Color.parseColor(Colors.black)
            textResId = "Use your head to interact"
            textH1ResId = "No tapping needed"
            enabled = true
        }
        verticalTilt {
            colorBackground = Color.parseColor("#FAFAFA")
            colorImage = Color.parseColor("#000000")
            colorText = Color.parseColor("#000000")
            textResId = "Please hold your phone vertically."
            enabled = true
        }
        countdown { countdownSeconds = 3 }
        delay {
            isEnabled = true
            // The dollar sign is escaped so that Kotlin does not read $s as a string template.
            message = "Authentication locked.\nPlease wait for:\n%1\$s"
        }
    }
}

val passiveVideoSettings = passiveVideoCaptureSettings {
    scene {
        preparationScene { backgroundColor = Color.WHITE }
        faceOverlay {
            progressBar { progressFill = Color.GREEN }
        }
        background {
            colorEnd = Color.parseColor("#189482")
            colorStart = Color.parseColor("#38ddb8")
        }
        previewScale {
            scaleX = 1.0f
            scaleY = 1.0f
        }
        feedback {
            videoBackground { }
        }
        tapping {
            colorBackground = Color.parseColor("#FAFAFA")
            colorImage = Color.parseColor("#000000")
            colorText = Color.parseColor("#000000")
            textResId = "Use your head to interact"
            textH1ResId = "No tapping needed"
            enabled = true
        }
        verticalTilt {
            colorBackground = Color.parseColor("#FAFAFA")
            colorImage = Color.parseColor("#000000")
            colorText = Color.parseColor("#000000")
            textResId = "Please hold your phone vertically."
            enabled = true
        }
        delay {
            isEnabled = true
            // The dollar sign is escaped so that Kotlin does not read $s as a string template.
            message = "Authentication locked.\nPlease wait for:\n%1\$s"
        }
    }
}

Use cases

As mentioned in the sections above, the new API is meant to be easier to integrate and more intuitive in general. To achieve that, use cases have been introduced. Each use case is dedicated to a particular job. The available use cases are listed below.

RemoteUseCase (recommended)

This use case performs face capture with backend liveness verification. It provides end-to-end integration, so the integrator does not have to integrate with backend services directly. However, a few things need to be provided:

Session id for given capture

RemoteCaptureListeners

EnvironmentInfo

Kotlin
RemoteUseCase(sessionId: String, listeners: RemoteCaptureListeners, environmentInfo: EnvironmentInfo)

| Parameter | Description |
|---|---|
| sessionId String | Session id correlated with the face capture. The most common approach is to create the session outside of the application (on the integrator's backend) and pass it to the application. It can be created via backend components: GIPS or directly via WebBio. See the session creation instructions below. |
| listeners RemoteCaptureListeners | Group of listeners related to the remote use case. They help to gather capture data and inform about the flow state and result. See the listeners section for more details. |
| environmentInfo EnvironmentInfo | Information about the Proofing Platform environment and authentication method. |

RemoteCaptureListeners - a detailed description of each listener can be found in the listeners section.

| Parameter | Description |
|---|---|
| livenessActiveListener LivenessActiveListener | Provides information about liveness ACTIVE mode. Useful when UISettings are not provided to FaceCaptureView. |
| faceTrackingInfo FaceTrackingInfoListener | Provides the coordinates of the face. |
| captureFeedback CaptureFeedbackListener | Provides feedback that should be presented to the end user to improve the capture process. Handled by the SDK when UISettings are used. |
| captureLivenessListener CaptureLivenessListener | Provides information about the liveness mode for the current capture. |
| stepInfoListener StepInfoListener | Provides information about the state of the capture. |
| passiveVideoListener PassiveVideoListener | Provides information about liveness PASSIVE_VIDEO mode. Useful when UISettings are not provided to FaceCaptureView. |
| captureResultListener RemoteCaptureResultListener | Provides information about the result of the whole flow. |
| livenessProcessingListener LivenessProcessingListener | Provides the progress of sending user image metadata to the server. It takes values from 0.0 to 1.0. |

EnvironmentInfo

FaceCapture is compatible with two types of authorization: API Key and OAuth.

In token type authorization, an access token is generated by the authorization server using the provided secrets. This token can be used by creating an AccessToken instance and using the appropriate initializer in the EnvironmentInfo class: init(accessToken: AccessToken, baseUrl: URL).

The AccessToken class holds information about the secret and token type from the OAuth authorization server.

Secrets can be found on the webpage: https://experience.idemia.com/dashboard/my-identity-proofing/access/environments/ .

Access Token solution constructor:

| Parameter | Description |
|---|---|
| baseUrl String | URL to Proofing services. For example production URL is: |
| accessToken AccessToken | Dedicated information about the access token used to authenticate on Proofing services. Do not share this and try to avoid storing it in the application's repository. |

ApiKey solution constructor:

| Parameter | Description |
|---|---|
| baseUrl String | URL to Proofing services. For example production URL is: https://proofing.app.eu.identity-prod.idemia.io:443/ |
| apiKey String | Dedicated key used to authenticate on Proofing services. Do not share this and try to avoid storing it in the application's repository. |

AccessToken

| Parameter | Description |
|---|---|
| secret String | Dedicated token used to authenticate on Proofing services. Do not share this and try to avoid storing it in the application's repository. |
| tokenType String | Token type to indicate how it should be used in the authorization request. |

Creating capture session

RemoteUseCase handles liveness verification on the backend side. It requires a session to be created for each capture. A common approach is to create the session on the integrator's backend and provide it to the application, after which the capture flow can be triggered. The following pages are a good starting point:

General description of liveness remote capture - here

GIPS API description - here

WebBio API description - here

For example, creating a session via GIPS requires the following steps:

Create an identity by calling:

POST: /v1/identities

As a result, an identity is returned.

Submit confirmation that the user has consented to perform specific evidence verifications:

POST: /v1/identities/{id}/consents

Start a liveness session by calling:

POST: /v1/identities/{id}/attributes/portrait/live-capture-session?mode=nativeSDK

The response to this call contains the session id used by the SDK.
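The order of the GIPS calls matters because the second and third requests depend on the identity id returned by the first. The following sketch only assembles the method and path of each call; ApiCall, createIdentityCall and sessionCallsFor are hypothetical helper names, and sending the requests (HTTP client, authentication headers, request bodies) is deliberately left out because this guide does not describe it.

```kotlin
// Hypothetical value type describing one HTTP call; not part of the SDK.
data class ApiCall(val method: String, val path: String)

// Step 1: create the identity. The response carries the identity id.
fun createIdentityCall() = ApiCall("POST", "/v1/identities")

// Steps 2 and 3: both depend on the id returned by step 1.
fun sessionCallsFor(identityId: String): List<ApiCall> = listOf(
    ApiCall("POST", "/v1/identities/$identityId/consents"),
    ApiCall(
        "POST",
        "/v1/identities/$identityId/attributes/portrait/live-capture-session?mode=nativeSDK"
    )
)
```

The session id returned by the last call is the value passed to RemoteUseCase as sessionId.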

Proceeding with WebBio requires the following steps:

Create a session by calling:

POST: /bioserver-app/v2/bio-sessions

The call must contain session data in the body.

Retrieve the session path from the response:

Kotlin
val bioSessionPath = response.headers()["Location"]

Get the BioSession:

GET: /bioserver-app{bioSessionPath}

The response to this call contains the session id used by the SDK.

Initialize the session with the id from the previous step and the liveness parameters passed in the body:

POST: /bioserver-app/v2/bio-sessions/{bioSessionId}/init-liveness-parameters

The snippet below shows use case creation:

Kotlin
val environmentInfo = EnvironmentInfo(
    "https://proofing.app.eu.identity-prod.idemia.io:443/",
    "YourApiKey"
)
val sessionInfo = sessionHandler.createSession(
    readFaceLivenessModeFromSettings(),
    readFaceSecurityLevelFromSettings()
)
val remoteUseCase = RemoteUseCase(
    sessionInfo.sessionId,
    RemoteCaptureListeners(
        faceTrackingInfo = faceTrackingInfoLoggingListener,
        captureFeedback = feedbackListener,
        captureLivenessListener = captureLivenessLoggingListener,
        stepInfoListener = stepInfoListener,
        passiveVideoListener = passiveVideoLoggingListener,
        captureResultListener = captureResultListener
    ),
    environmentInfo
)

If this guide is not enough, the FaceSampleAppLite source code is available on our Artifactory repository. Download the latest package with the GIPS implementation from here or with the WBS implementation from here to see an integration that includes session creation.

Keep in mind that the result of the flow on the SDK side is that the required data is sent to the backend service. The captured image might be required for application needs. To acquire it, an additional request to WebBioServer has to be made. The related API description can be found here.

IlluminationRequest is a simple interface responsible for starting the illumination process. It is returned by the onIlluminationDemand method of MlcListener.

Listeners

The new API introduces multiple listeners that let the integrator acquire capture-related data. All listeners are called on the UI thread, so it is safe to manipulate UI components directly from them.

CaptureLivenessListener

Returns information about the current liveness capture mode. Useful for RemoteUseCase, where the mode comes from the backend side.

Kotlin
fun captureLiveness(liveness: Liveness)

Liveness

| Value | Description |
|---|---|
| ACTIVE | The current liveness mode is the active one. The user needs to connect points using their face. |
| PASSIVE | The current liveness mode is the passive one. No challenges for the user. |
| PASSIVE_VIDEO | More advanced variant of passive mode. It requires backend integration (can be used with RemoteUseCase) as it uses more restrictive liveness algorithms. |

StepInfoListener

This listener provides information about the capture flow state within a StepInfo object.

Kotlin
fun stepInfo(stepInfo: StepInfo)

StepInfo

| Value | Description |
|---|---|
| PREPARING_LIVENESS | Liveness challenge is being prepared. |
| CAPTURE_STARTED | Capture has been started. Preview should actively show frames from camera. |

LivenessActiveListener

This listener provides information about active face capture. This mode requires the user to connect dots in the correct order by moving their face. The callbacks tell the integrator what the current status of the challenge is and what to display. Keep in mind that when UISettings are provided to FaceCaptureView, there is no need to handle this listener because the SDK draws the challenge with the provided style.

Kotlin
fun onPointerUpdate(pointInfo: PointerInfo)

PointerInfo contains information about the user's "viewfinder" position. This is the point that the user needs to put on a target in order to mark it as "captured".

Kotlin
fun onTargetUpdate(targetInfo: TargetInfo)

TargetInfo contains information about the targets to capture.

| Parameter | Description |
|---|---|
| x Int | X coordinate of target. |
| y Int | Y coordinate of target. |
| show Boolean | Indicates if target should be visible to user. |
| radius Int | Radius of target relative to capture frame size. |
| number Int | Number of target. |
| completness Float | Value in the range from 0.0 to 1.0, where 1.0 means that the target is fully captured. |
| current Boolean | Indicates whether the given target is the currently active one (to be captured). |

Kotlin
fun onNumberTargets(numberOfTargets: Int)

This callback provides information about how many points need to be captured to pass the challenge.
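When UISettings are not used, the values from onNumberTargets and onTargetUpdate can be combined into a single progress figure for a custom UI. The sketch below is one possible way to do that, not SDK behavior: TargetInfo here is a hypothetical local data class that merely mirrors the fields documented in the table, and ChallengeProgress is an illustrative helper name.

```kotlin
// Hypothetical stand-in mirroring the TargetInfo fields documented in this guide.
data class TargetInfo(
    val x: Int, val y: Int, val show: Boolean, val radius: Int,
    val number: Int, val completness: Float, val current: Boolean
)

// Collects LivenessActiveListener-style callbacks and derives overall progress.
class ChallengeProgress {
    private var total = 0
    private val completion = mutableMapOf<Int, Float>()

    fun onNumberTargets(numberOfTargets: Int) { total = numberOfTargets }

    // Keeps the latest completion value reported for each target number.
    fun onTargetUpdate(targetInfo: TargetInfo) {
        completion[targetInfo.number] = targetInfo.completness.coerceIn(0f, 1f)
    }

    // Average completion over all expected targets, in the range 0.0..1.0.
    fun overall(): Float =
        if (total == 0) 0f else completion.values.sum() / total
}
```

Averaging is only one choice; a UI could equally count the targets whose completion has reached 1.0.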

PassiveVideoListener

This listener helps to pass the passive video liveness capture mode. Before the capture starts there is a preparation phase, and the capture itself reports its progress. The information provided by this listener should be presented to the end user. Keep in mind that when UISettings are provided to FaceCaptureView, there is no need to handle this listener because the SDK draws the challenge with the provided style.

Kotlin
fun onPreparationStarted()

Tells the integrator that preparation of the capture has started.

Kotlin
fun onPreparationFinished()

Tells the integrator that the preparation phase has finished. The capture will now be performed.

Kotlin
fun overlayUpdated(overlay: OvalOverlay)

To make the capture easier, there is a special configuration for a UI oval to be displayed to the end user. OvalOverlay contains the coordinates and size of that oval.

Kotlin
fun progressUpdated(progress: Float)

Progress of the capture.

CaptureResultListener

Used for all use cases. Provides information about the flow result.

Kotlin
fun onFinish(result: CaptureResult)

A CaptureResult instance is either a Success, which indicates a successful flow, or a Failure, which contains an Error instance. For more details see the errors section.
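A result with two possible shapes is conveniently handled with a when expression. The sketch below is a self-contained illustration under stated assumptions: CaptureResult and ErrorInfo are hypothetical local declarations modeled on the description in this guide (a Success, or a Failure carrying an error with a code and a message), and the SDK's actual class layout may differ.

```kotlin
// Hypothetical stand-ins; this guide only states that CaptureResult is either
// a Success or a Failure carrying an Error with (among others) code and message.
data class ErrorInfo(val code: Int, val message: String)

sealed class CaptureResult {
    object Success : CaptureResult()
    data class Failure(val error: ErrorInfo) : CaptureResult()
}

// Turns a result into a short text, e.g. for logging.
fun describe(result: CaptureResult): String = when (result) {
    is CaptureResult.Success -> "Capture finished successfully"
    is CaptureResult.Failure ->
        "Capture failed (${result.error.code}): ${result.error.message}"
}
```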

CaptureFeedbackListener

This listener provides crucial information for the user about the capture. It helps the user find the optimal position in front of the camera.

Kotlin
fun onFeedback(captureFeedback: CaptureFeedback)

CaptureFeedback is an enum with self-explanatory instructions. When UISettings are used, the SDK covers it by mapping these values to text instructions for the user.

FaceTrackingInfoListener

This listener provides the coordinates and size of the rectangle with the face position (relative to the preview frame size) in real time during the capture. It is helpful when an overlay showing the detected face needs to be drawn on the preview.

Kotlin
fun faceTrackingInfo(trackingInfo: FaceTrackingInfo)

FaceTrackingInfo provides the "face rectangle" information.

LivenessProcessingListener

This listener provides information about the current progress of uploading metadata to the server.

Kotlin
fun onLivenessMetadataUploadProgressUpdated(@FloatRange(from = 0.0, to = 1.0) progress: Float)

MlcListener

This listener provides information related to the MultidimensionalLivenessCheck capture.

It contains the following methods:

Kotlin
fun onSmileStabilityChange(@FloatRange(from = 0.0, to = 1.0) value: Float)

Returns the completion of the smile step as a value between 0.0 and 1.0.

Kotlin
fun onSmileSizeChange(@FloatRange(from = 0.0, to = 1.0) value: Float)

Returns the current smile size.

Kotlin
fun onSmileFinished()

Called when the smile acquisition process has ended.

Kotlin
fun onIlluminationPrepared(@FloatRange(from = 0.0, to = 1.0) scale: Float)

Indicates that the illumination process is ready to start. The scale parameter is a value between 0.0 and 1.0 and should be used to rescale the preview component before triggering illumination.

Kotlin
fun onIlluminationProgressChange(@FloatRange(from = 0.0, to = 1.0) progress: Float)

Returns the current illumination progress as a value between 0.0 and 1.0.

Kotlin
fun onColorToDisplay(red: Int, green: Int, blue: Int)

Returns the color that should be displayed as part of the illumination process. The background of the preview should change according to these values.

Kotlin
fun onIlluminationFinished()

Called after the illumination process ends.

Kotlin
fun onIlluminationDemand(request: IlluminationRequest)

Returns the IlluminationRequest that is used to start illumination.

Additional classes

IlluminationRequest

Kotlin
interface IlluminationRequest {
    fun start()
}

ActiveLivenessUseCase

This use case performs face capture with on-device liveness verification, which means that no external requests are made in order to verify the user. "Active" in the use case name indicates that the end user has to complete an action to pass verification: points on the screen need to be joined in a given order using the face as a pointer.

The use case should be created using its constructor:

Kotlin
ActiveLivenessUseCase(
    val listeners: ActiveCaptureListeners = ActiveCaptureListeners(),
    val numberOfPoints: Int,
    val timeoutInSeconds: Long = DEFAULT_TIMEOUT_SECONDS,
    val securityLevel: SecurityLevel = SecurityLevel.HIGH,
)

| Parameter | Description |
|---|---|
| listeners ActiveCaptureListeners | Aggregated listeners used during this use case. See the listeners section for more details. |
| numberOfPoints Int | Number of points to be connected during the capture. |
| timeoutInSeconds Long | After the given number of seconds, the capture finishes with a timeout error. Default value is: 120 |
| securityLevel SecurityLevel | Determines how restrictive the liveness algorithms are. Default value is: SecurityLevel.HIGH |

Listeners

LivenessActiveListener

This listener provides information about active face capture. This mode requires the user to connect dots in the correct order by moving their face. The callbacks tell the integrator what the current status of the challenge is and what to display. Keep in mind that when UISettings are provided to FaceCaptureView, there is no need to handle this listener because the SDK draws the challenge with the provided style.

Kotlin
fun onPointerUpdate(pointInfo: PointerInfo)

PointerInfo contains information about the user's "viewfinder" position. This is the point that the user needs to put on a target in order to mark it as "captured".

Kotlin
fun onTargetUpdate(targetInfo: TargetInfo)

TargetInfo contains information about the targets to capture.

| Parameter | Description |
|---|---|
| x Int | X coordinate of target. |
| y Int | Y coordinate of target. |
| show Boolean | Indicates if target should be visible to user. |
| radius Int | Radius of target relative to capture frame size. |
| number Int | Number of target. |
| completness Float | Value in the range from 0.0 to 1.0, where 1.0 means that the target is fully captured. |
| current Boolean | Indicates whether the given target is the currently active one (to be captured). |

Kotlin
fun onNumberTargets(numberOfTargets: Int)

This callback provides information about how many points need to be captured to pass the challenge.

CaptureResultListener

Provides information about the flow result.

Kotlin
fun onFinish(result: CaptureResult)

A CaptureResult instance is either a Success, which indicates a successful flow, or a Failure, which contains an Error instance. For more details see the errors section.

FaceTrackingInfoListener

This listener provides the coordinates and size of the rectangle with the face position (relative to the preview frame size) in real time during the capture. It is helpful when an overlay showing the detected face needs to be drawn on the preview.

Kotlin
fun faceTrackingInfo(trackingInfo: FaceTrackingInfo)

CaptureFeedbackListener

This listener provides crucial information for the user about the capture. It helps the user find the optimal position in front of the camera.

Kotlin
fun onFeedback(captureFeedback: CaptureFeedback)

CaptureFeedback is an enum with self-explanatory instructions. When UISettings are used, the SDK covers it by mapping these values to text instructions for the user.

PassiveLivenessUseCase

This use case performs face capture with on-device liveness verification, which means that no external requests are made in order to verify the user. "Passive" in the use case name indicates that the end user does not have to perform any action to pass verification, other than placing their face in front of the camera.

The use case should be created using its constructor:

Kotlin
PassiveLivenessUseCase(
    val listeners: ActiveCaptureListeners = ActiveCaptureListeners(),
    val timeoutInSeconds: Long = DEFAULT_TIMEOUT_SECONDS,
    val securityLevel: SecurityLevel = SecurityLevel.HIGH,
)

| Parameter | Description |
|---|---|
| listeners ActiveCaptureListeners | Aggregated listeners used during this use case. See the listeners section for more details. |
| timeoutInSeconds Long | After the given number of seconds, the capture finishes with a timeout error. Default value is: 120 |
| securityLevel SecurityLevel | Determines how restrictive the liveness algorithms are. Default value is: SecurityLevel.HIGH |

Listeners

CaptureResultListener

Provides information about the flow result.

Kotlin
fun onFinish(result: CaptureResult)

A CaptureResult instance is either a Success, which indicates a successful flow, or a Failure, which contains an Error instance. For more details see the errors section.

FaceTrackingInfoListener

This listener provides the coordinates and size of the rectangle with the face position (relative to the preview frame size) in real time during the capture. It is helpful when an overlay showing the detected face needs to be drawn on the preview.

Kotlin
fun faceTrackingInfo(trackingInfo: FaceTrackingInfo)

CaptureFeedbackListener

This listener provides crucial information for the user about the capture. It helps the user find the optimal position in front of the camera.

Kotlin
fun onFeedback(captureFeedback: CaptureFeedback)

CaptureFeedback is an enum with self-explanatory instructions. When UISettings are used, the SDK covers it by mapping these values to text instructions for the user.

Errors

Every flow can finish with an Error type of result. It means that something went wrong during the capture or the backend communication. The Error object contains information that helps to handle the failed flow.

Error

| Parameter | Description |
|---|---|
| type ErrorType | Type of the error. High-level information about what went wrong. Find the type descriptions below. |
| code Int | Special code dedicated to a particular case. Very helpful in L2, L3 troubleshooting. |
| message String | Message with the error description. |
| unlockDateTime Long? | Time in the "UTC" time zone when the capture will be unblocked. This field has a value when |
| failureReasons List? | List of enums containing the reasons for failure. |

ErrorType

| Type | Description |
|---|---|
| CONNECTION_ISSUE | General issue with the connection. See the message and error code for more information. |
| AUTHENTICATION | Backend authentication failed. Wrong credentials have probably been used for the given environment. |
| INVALID_SESSION | Session ID is not correct. Most probably the session has expired or has been finished. |
| TIMEOUT | Timeout occurred during the flow. |
| BAD_CAPTURE | Capture failed. Face was not detected or liveness check did not pass. |
| UNKNOWN | Unknown type of exception. Also used as the default type for a few cases. |
| CANCELED | Flow has been canceled. Can be triggered by the integrator, or automatically when a Lifecycle has been passed to the setUp method. |
| VERIFICATION | Device signature verification failed. |
| INVALID_LICENSE | License validation failed. Make sure that it has been activated with LicenseManager. |
| DEVICE_BLOCKED | Capture on this device has been blocked for a period of time because of too many failures. |
| LIVENESS_CHECK | Liveness verification failed during the capture. It can happen during offline capture use cases such as ActiveLivenessUseCase or PassiveLivenessUseCase. |
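An application usually has to decide what to do next for each error type. The sketch below shows one possible mapping with an exhaustive when expression. It is an illustration only: ErrorType is redeclared locally as a hypothetical stand-in listing the documented values, and NextStep together with the chosen reactions are assumptions about application policy, not something the SDK prescribes.

```kotlin
// Hypothetical stand-in listing the ErrorType values documented in this guide.
enum class ErrorType {
    CONNECTION_ISSUE, AUTHENTICATION, INVALID_SESSION, TIMEOUT, BAD_CAPTURE,
    UNKNOWN, CANCELED, VERIFICATION, INVALID_LICENSE, DEVICE_BLOCKED, LIVENESS_CHECK
}

// Illustrative reactions an application might choose; not defined by the SDK.
enum class NextStep { RETRY_CAPTURE, NEW_SESSION, WAIT_FOR_UNLOCK, CHECK_SETUP, NONE }

// The when expression is exhaustive, so adding a new ErrorType value
// forces this mapping to be revisited at compile time.
fun nextStepFor(type: ErrorType): NextStep = when (type) {
    ErrorType.TIMEOUT, ErrorType.BAD_CAPTURE, ErrorType.LIVENESS_CHECK,
    ErrorType.CONNECTION_ISSUE -> NextStep.RETRY_CAPTURE
    ErrorType.INVALID_SESSION -> NextStep.NEW_SESSION
    ErrorType.DEVICE_BLOCKED -> NextStep.WAIT_FOR_UNLOCK
    ErrorType.AUTHENTICATION, ErrorType.INVALID_LICENSE,
    ErrorType.VERIFICATION -> NextStep.CHECK_SETUP
    ErrorType.CANCELED, ErrorType.UNKNOWN -> NextStep.NONE
}
```

For DEVICE_BLOCKED, the unlockDateTime field of the Error can be shown to the user instead of offering an immediate retry.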

FailureReason

| Type | Description |
|---|---|
| FACE_NOT_DETECTED | Face was not detected. |
| INSUFFICIENT_SMILE | Smile was not detected (or was not genuine enough) during MLC capture. |
| INCORRECT_FACE_POSITION | Face was not correctly positioned within the frame. |
| TOO_DARK | It's too dark to perform the capture. |
| TOO_BRIGHT | It's too bright to perform the capture. |

Integration of MLC capture

MultidimensionalLivenessCheck (MLC) is a recommended mode for liveness capture. This capture consists of three phases:

Framing - the user has to correctly align their face within the frame.

Smile - the user needs to smile genuinely.

Illumination - the face is verified with a sequence of color flashes.

There are two ways of integrating this capture: by providing UISettings, or by handling each step of the capture on your own.

Integration using UISettings

Using UISettings is the easiest way of integrating an MLC capture. It requires the following steps:

Create a session with Liveness of type MLC. See the creating capture session section for more details.

Create RemoteUseCase with sessionId, EnvironmentInfo and listeners.

Kotlin
val remoteUseCase = RemoteUseCase(
    sessionId,
    RemoteCaptureListeners(
        stepInfoListener = stepInfoListener,
        captureResultListener = captureResultListener,
    ),
    environmentInfo
)

Create mlcCaptureSettings with the feedback specific to MLC and pass it to the UISettings object.

Call setUp on FaceCaptureView with the previously created objects:

Kotlin
binding.captureView.setUp(
    remoteUseCase,
    lifecycle, // If you don't want to start and stop capture on your own
    UISettings(mlcCaptureSettings = mlcCaptureSettings)
)

After that, the capture will be started.

Integration based on MlcListener

The second way of handling an MLC capture is to implement your own UI and logic for each phase of the capture. It is more complex, but allows better customization than the UISettings approach. This integration relies on correctly handling the information coming from MlcListener. As mentioned before, an MLC capture consists of three phases:

Framing - requires correctly placing the face within the preview. To proceed, the user has to follow the feedback coming from CaptureFeedbackListener.

Smile - starts after receiving the feedback CaptureFeedback.FACE_INFO_MAKE_A_SMILE. Three methods in MlcListener are related to this phase: onSmileStabilityChange, which can be used to track the progress of the whole phase; onSmileSizeChange, which shows smile progress to the user (for example, using SmileIndicatorBar); and onSmileFinished, which means that the smile phase has ended.

Illumination - during this phase the preview background has to change colors according to the values coming from the SDK. To handle this phase properly, you have to:

Rescale FaceCaptureView with the value received from the onIlluminationPrepared method.

Show additional instructions to the user, for example informing them about the incoming color changes or the need to move their face closer to the screen due to the changed preview size.

Trigger the illumination process by calling start() on the IlluminationRequest received from the onIlluminationDemand method.

Adjust the color of the preview background to the values coming from onColorToDisplay. If you want to track the progress of the illumination phase, it is returned in onIlluminationProgressChange (as a value in the range between 0.0 and 1.0). The process ends when onIlluminationFinished is called.
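The three components delivered by onColorToDisplay have to be turned into a single color value before they can be applied to a background. The helper below is a minimal sketch of that conversion, assuming each component is in the range 0 to 255 (this guide does not state the range, so out-of-range values are clamped defensively). toArgb is a hypothetical helper name; on Android the platform method Color.rgb performs the same packing.

```kotlin
// Packs red, green and blue into an opaque ARGB integer, the format Android
// views accept for background colors. Values outside 0..255 are clamped.
fun toArgb(red: Int, green: Int, blue: Int): Int {
    val r = red.coerceIn(0, 255)
    val g = green.coerceIn(0, 255)
    val b = blue.coerceIn(0, 255)
    // Alpha is fixed at 0xFF so the background is fully opaque.
    return (0xFF shl 24) or (r shl 16) or (g shl 8) or b
}
```

Inside an MlcListener implementation, the returned value could be passed to setBackgroundColor on the view that surrounds the preview.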

When MlcListener is implemented according to the instructions above, you must:

Create a screen with a FaceCaptureView that does not cover all the available space, because during the illumination phase the user's face is verified with a sequence of color flashes, and the FaceCaptureView background has to be adjusted to the colors from the onColorToDisplay callback.

Create a session with Liveness of type MLC. See the creating capture session section for more details.

Create RemoteUseCase with sessionId, EnvironmentInfo and listeners.

Kotlin
val remoteUseCase = RemoteUseCase(
    sessionId,
    RemoteCaptureListeners(
        stepInfoListener = stepInfoListener,
        captureFeedback = captureFeedbackListener,
        captureResultListener = captureResultListener,
        mlcListener = mlcListener
    ),
    environmentInfo
)

Call setUp on FaceCaptureView with the previously created objects:

Kotlin
binding.captureView.setUp(
    remoteUseCase,
    lifecycle, // If you don't want to start and stop capture on your own
    null
)

Face capture - local

Creating a FaceCaptureHandler

These instructions will help you create a BioCapture handler.

Retrieve a capture handler to perform all the biometric capture operations. You must first configure the capture options. For projects that use Kotlin, there are handlers from com.idemia.smartsdk.capture that support initializing the capture preview with a suspend method.

BioCaptureMode is now deprecated and should not be used during capture configuration because it will be removed in a future release.

Review the use cases named Capture biometrics.

Review all the features provided by the BioCaptureHandler handler here.

val captureOptions = FaceCaptureOptions(FaceLiveness.PASSIVE).apply {
    camera = Camera.FRONT
    captureTimeout = 120
    overlay = Overlay.OFF
}
val captureHandler = FaceCaptureHandler(context, captureOptions)

| Parameter | Description |
|---|---|
| activity Activity | The Android activity. |
| options IFaceCaptureOptions | The capture options to configure the bio capture handler. |
| callbacks BioCaptureAsyncCallbacks | Callbacks to be executed depending on the result. |

Errors

| Error code | Description |
|---|---|
| | The application parameter is not available. |
| | The graph initialization failed. |
| | Initialization failed. |
| | Parameters are invalid. |
| | Parameter is missing. |
| | Parameter size is incorrect. |
| | One of the parameters is unknown. |
| | Handle is invalid. |
| | Java libraries are not found. |
| | Java context is not set. |
| | Java is unable to execute. |
| | License is invalid. |
| | Memory allocation issue. |
| | BioCapture profile is not available. |
| | BioCapture sub-profile is not available. |
| | BioCapture type mismatch. |
| | Unknown error |

Handlers

This section discusses the BioCapture handler, FaceCapture handler and BioMatcher handler.

BioCapture handler

You must retrieve the capture handler through the Biometric Capture SDK entry point.

Face listener

This sets the listener to receive feedback (such as when a user moves their face to the right) as shown in the snippet:

captureHandler.setFaceTrackingListener({ trackingInfo ->
    // Tracking info to know where the face is.
})

Start preview

This asynchronously starts the camera preview. It is recommended to start the capture once the preview has been initialized, as shown in the snippet:

coroutineScope.launch {
    handler.startPreview()
    handler.startCapture()
}

Stop preview

This stops the camera preview as shown in the snippet:

handler.stopPreview()

Start capture

This starts the biometric capture as shown in the snippet:

handler.startCapture()

Stop capture

This stops the biometric capture as shown in the snippet:

handler.stopCapture()

Switch camera

This switches between different cameras as shown in the snippet:

handler.switchCamera(Camera.FRONT) // Use front camera
handler.switchCamera(Camera.REAR) // Use rear camera

Destroy

This releases all the handler resources as shown in the snippet:

handler.destroy()

Overlay

This sets the overlay option.

handler.overlay = Overlay.OFF // Disable preview's overlay
handler.overlay = Overlay.ON // Enable preview's overlay

CaptureOptions

This retrieves the capture options used in this handler as shown in the snippet:

val options: ICaptureOptions = handler.captureOptions

Force capture

This forces a capture as shown in the snippet:

handler.forceCapture()

Capture handler status

Note: Check CaptureHandlerStatus.

This retrieves the status of the capture handler as shown in the snippet:

val captureHandlerStatus: CaptureHandlerStatus = handler.captureStatus

FaceCapture handler

Note: It extends BioCaptureHandler.

You must retrieve the capture handler through the Biometric Capture SDK entry point for BioCaptureHandler, as shown in the snippet:

// Get activity from application
val activity: Activity = ...
// Populate a CaptureOptions object
val captureOptions: IFaceCaptureOptions = FaceCaptureOptions(FaceLiveness.PASSIVE)
captureOptions.faceLivenessSecurityLevel = FaceLivenessSecurityLevel.HIGH
captureOptions.camera = Camera.FRONT
captureOptions.captureTimeout = 120
captureOptions.overlay = Overlay.OFF
BioSdk.createFaceCaptureHandler(activity, captureOptions, object : MscAsyncCallbacks<IFaceCaptureHandler?> {
    override fun onPreExecute() {
        // Optional hook on the builtin Android AsyncTask call-back `onPreExecute`
    }

    override fun onSuccess(faceCaptureHandler: IFaceCaptureHandler) {
        // Indicates that initialization succeeded, the returned handler can be used to start the capture.
        handler = faceCaptureHandler
        // handler.setTotalNumberOfCapturesBeforeDelay(-1) disables delays between face capture failures.
    }

    override fun onError(e: BioCaptureHandlerError) {
        // An error has occurred during the initialization
    }
})

Capture result listener

This sets the listener to receive the face captures. The face image callback will be fired whenever the capture is finished, as shown in the snippet:

handler.setFaceCaptureResultListener(object : FaceCaptureResultListener {
    override fun onCaptureSuccess(image: FaceImage) {
        // Capture finished with success
    }

    override fun onCaptureFailure(captureError: CaptureError, biometricInfo: IBiometricInfo, extraInfo: Bundle) {
        // Capture finished with failure
    }
})

| onCaptureSuccess | Called when the capture finishes successfully |
|---|---|
| image FaceImage | Captured face image |

| FaceImage | |
|---|---|
| getLivenessResult FaceLivenessResult | Resolution of capture liveness |
| getMetadata Metadata | Low-level data needed for verification or debugging |

FaceImage extends IImage, so it is possible to call getImageQuality() on it. However, this is not recommended: imageQuality is only available for finger capture, and the method always returns -1 for FaceImage.

| onCaptureFailure | Called when the capture fails |
|---|---|
| captureError CaptureError | Reason for the capture failure |
| biometricInfo IBiometricInfo | Biometric information about location and classification |
| extraInfo Bundle | Holds capture extra info: capture delay date |

Use CR2D challenges

This is another type of challenge, which consists of target points and points controlled by the user. To use it, pass FaceLiveness.ACTIVE to the FaceCaptureOptions constructor.

This example sets CR2D in capture options as shown in the snippet:

val options: FaceCaptureOptions = FaceCaptureOptions(FaceLiveness.ACTIVE)

Use passive liveness challenge

Passive mode checks for liveness without user interaction (no head movement required). As in default mode, the user only needs to show their face in front of the camera so that an image can be acquired. Special algorithms then estimate whether the user is a real person. To use this mode, pass FaceLiveness.PASSIVE to the FaceCaptureOptions constructor.

This example sets Passive in the capture options as shown in the snippet:

val options: FaceCaptureOptions = FaceCaptureOptions(FaceLiveness.PASSIVE)

Get debug data

You can save some capture data on the user device's memory. In some cases, keeping those files might help to solve issues.

Below is an example of how to configure the debug data options. The data can be found on the SD card in the SmartSDK_debug_data directory. An example is shown in the snippet:

[...]
val debugSettingsBuilder: DebugSettingsBuilder = DebugSettingsBuilder()
debugSettingsBuilder.logLevel(logLevel)
    .storingType(DataStoringType.LAST_SESSION_ONLY)
    .saveCapturedImages(DebugOption.DISABLED)
captureOptions.setDebugDataSettings(debugSettingsBuilder.build())

Note: DataStoringType can take one of two values: LAST_SESSION_ONLY or MULTIPLE_SESSIONS. The first overwrites the data in a single directory. The second creates a separate directory for each capture.

An option exists to store special .rtv files that help you understand what is happening during a capture.

Note: Storing these files takes a lot of space. LogLevel describes which part of the logs is saved to a file. If needed, the integrator can also save captured images by enabling the saveCapturedImages option.

Set maximum captures before delay

This field sets the maximum number of captures allowed before further captures are delayed.

Values less than or equal to 0 disable the functionality. Values greater than 0 set the number of attempts before blocking.

The default value is 5, as shown in the snippet:

faceCaptureOptions.maxCapturesBeforeDelay = 5

There is also a getter for this value, as shown in the snippet:

faceCaptureOptions.maxCapturesBeforeDelay

Set capture delay time array

This sets the list of capture delays applied to failed attempts that occur after maxCapturesBeforeDelay has been reached.

The delay for the next attempt is taken from the array as timeCaptureDelayArray[n - maxCapturesBeforeDelay].

For all the attempts after the array length, the last item is taken as shown in the snippet:

val delayTimes: List<Long> = mutableListOf(1L, 5L, 10L, 30L, 60L)
faceCaptureHandler.setTimeCaptureDelayArray(delayTimes)

Get time to unlock capture

This provides information about the delay time before the user can retry (specified in seconds). A return value of 0 means that the capture is not blocked.

An example request is shown in the snippet:

faceCaptureHandler.timeToUnlock

Get liveness captures attempts left before delay

This provides information about the number of captures that can be completed before the delay is triggered.

It returns the number of attempts before the capture will be blocked, as shown in the snippet:

handler.captureAttemptsLeft

It returns 0 if the capture is blocked, and Int.MAX_VALUE if the capture delays are turned off.
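The rules from the last few sections (maximum captures before delay, the delay array, and the attempts-left value) can be modelled in a few lines. The sketch below is only an illustration of those rules, not the SDK's implementation. DelayPolicy, delayAfterFailure, and attemptsLeft are hypothetical names, and it assumes that n counts failed attempts starting from 1 and that the array index is n - maxCapturesBeforeDelay, clamped to the last item.

```kotlin
// Illustrative model only; names and counting convention are assumptions,
// not part of the Capture SDK.
class DelayPolicy(
    private val maxCapturesBeforeDelay: Int,
    private val delaysSeconds: List<Long>
) {
    init {
        require(delaysSeconds.isNotEmpty()) { "at least one delay value is needed" }
    }

    // Values less than or equal to 0 disable the feature, as described above.
    private val enabled: Boolean
        get() = maxCapturesBeforeDelay > 0

    // Delay in seconds applied after the n-th failed attempt (n starts at 1; assumption).
    fun delayAfterFailure(n: Int): Long {
        if (!enabled || n < maxCapturesBeforeDelay) return 0L
        // Past the end of the array, the last item is reused.
        val index = (n - maxCapturesBeforeDelay).coerceAtMost(delaysSeconds.lastIndex)
        return delaysSeconds[index]
    }

    // Mirrors the documented convention: 0 when blocked, Int.MAX_VALUE when delays are off.
    fun attemptsLeft(failedSoFar: Int): Int {
        if (!enabled) return Int.MAX_VALUE
        return (maxCapturesBeforeDelay - failedSoFar).coerceAtLeast(0)
    }
}
```

Under these assumptions, a policy built with a maximum of 5 and the delays 1, 5, 10, 30, 60 yields no delay after the fourth failure, 1 second after the fifth, and 60 seconds from the ninth failure onward.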

Internal counter

Warning: For security reasons, there is also an internal counter. After a significant number of failures, the capture can still be delayed unless this feature is turned off.

Liveness security levels

In IFaceCaptureOptions you can set the liveness security strength. It is configured via the FaceLivenessSecurityLevel enum.

The liveness security levels are:

LOW

MEDIUM

HIGH (recommended)

Compression recommendations

Selfie images

Recommended compression is JPEG90

Size of image will be about 100 KB

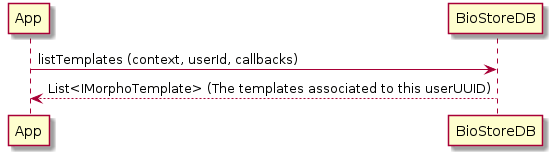

BioStore

The use of this component is optional. Its purpose is to allow the integrator to easily persist templates.

Query templates by userUUID

This lists the templates stored in the repository filtering by user.

BioStoreDB.listTemplates(context, userId, object : DataBaseAsyncCallbacks<List<IMorphoTemplate?>?>() {
    override fun onPreExecute() {
        // Optional hook on the builtin Android AsyncTask callback `onPreExecute`
    }

    override fun onSuccess(result: List<IMorphoTemplate?>?) {
        // The list of templates that match the criteria.
    }

    override fun onError(e: Exception?) {
        // An error has occurred.
    }
})

Function

public static void listTemplates(final Context context, final UUID userId, DataBaseAsyncCallbacks<List<IMorphoTemplate>> callbacks);

| Parameter | Description |
|---|---|
| context Context | The Android context. |
| userId UUID | The user identifier. |
| callbacks DataBaseAsyncCallbacks | Callbacks to be executed depending on the result. |

Errors

You will receive an exception reporting the error.
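Because the outcome is reported through callbacks, coroutine-based code may find it convenient to adapt the pattern into a suspend function. The sketch below shows one way to do that using only the Kotlin standard library. AsyncCallbacks is a hypothetical stand-in that mirrors the three hooks used in the snippet above; awaitCallbacks and runNow are also hypothetical helpers. With the real SDK, the anonymous object would extend DataBaseAsyncCallbacks instead of this stand-in.

```kotlin
import kotlin.coroutines.Continuation
import kotlin.coroutines.EmptyCoroutineContext
import kotlin.coroutines.resume
import kotlin.coroutines.resumeWithException
import kotlin.coroutines.startCoroutine
import kotlin.coroutines.suspendCoroutine

// Hypothetical stand-in for the SDK callback type (assumption, not the SDK class).
interface AsyncCallbacks<T> {
    fun onPreExecute() {}
    fun onSuccess(result: T)
    fun onError(e: Exception)
}

// Suspends until the callback-style call reports success or failure.
suspend fun <T> awaitCallbacks(call: (AsyncCallbacks<T>) -> Unit): T =
    suspendCoroutine { continuation ->
        call(object : AsyncCallbacks<T> {
            override fun onSuccess(result: T) {
                continuation.resume(result)
            }

            override fun onError(e: Exception) {
                continuation.resumeWithException(e)
            }
        })
    }

// Tiny runner for demos and tests: only valid when the callback fires synchronously.
fun <T> runNow(block: suspend () -> T): T {
    var outcome: Result<T>? = null
    block.startCoroutine(Continuation(EmptyCoroutineContext) { outcome = it })
    return checkNotNull(outcome) { "callback did not fire synchronously" }.getOrThrow()
}
```

In an application, awaitCallbacks would typically be called from inside an existing coroutine scope, so that an error surfaces as a regular exception and can be handled with try/catch.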

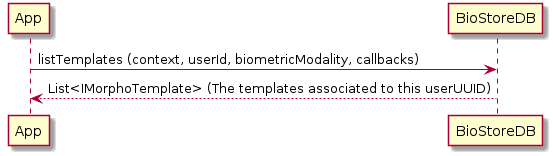

Query templates by userUUID and modality

This lists the templates stored in the repository filtering by User and BiometricModality.

BioStoreDB.listTemplates(context, userId, biometricModality, object : DataBaseAsyncCallbacks<List<IMorphoTemplate?>?>() {
    override fun onPreExecute() {
        // Optional hook on the builtin Android AsyncTask callback `onPreExecute`
    }

    override fun onSuccess(result: List<IMorphoTemplate?>?) {
        // The list of templates that match the criteria.
    }

    override fun onError(e: Exception?) {
        // An error has occurred.
    }
})

Function

void listTemplates(final Context context, final UUID userId, final BiometricModality biometricModality, DataBaseAsyncCallbacks<List<IMorphoTemplate>> callbacks);

| Parameter | Description |
|---|---|
| context Context | The Android context. |
| userId UUID | The user identifier. |
| biometricModality BiometricModality | The BiometricModality enum option. |
| callbacks DataBaseAsyncCallbacks | Callbacks to be executed depending on the result. |

Errors

You will receive an exception reporting the error.

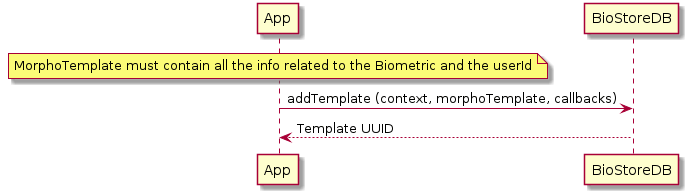

Add template

This stores a template in the repository. If a template with the same user UUID, biometric location, and biometric modality already exists, it is updated and its UUID is returned.

Note: You cannot have two templates with the same configuration.

BioStoreDB.addTemplate(context, morphoTemplate, object : DataBaseAsyncCallbacks<UUID?>() {
    override fun onPreExecute() {
        // Optional hook on the builtin Android AsyncTask callback `onPreExecute`
    }

    override fun onSuccess(result: UUID?) {
        // The template has been added and the template's uuid is returned as a parameter.
    }

    override fun onError(e: Exception?) {
        // An error has occurred.
    }
})

Function

public static void addTemplate(final Context context, final IMorphoTemplate template, DataBaseAsyncCallbacks<UUID> callbacks);

| Parameter | Description |
|---|---|
| context Context | The Android context. |
| template IMorphoTemplate | The template to be stored. |
| callbacks DataBaseAsyncCallbacks | Callbacks to be executed depending on the result. |

Errors

You will receive an exception reporting the error.
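The add-or-update behaviour described for addTemplate (one template per combination of user UUID, biometric location, and biometric modality) can be pictured with a small in-memory model. This is a sketch of the documented rule only, not how BioStore is implemented. StoredTemplate, InMemoryTemplateStore, and the plain-string location and modality fields are hypothetical simplifications.

```kotlin
import java.util.UUID

// Hypothetical stand-in for a template; only the fields named in the text are modelled.
data class StoredTemplate(
    val userId: UUID,
    val location: String,
    val modality: String,
    val payload: String
)

// Illustrative in-memory store; not the SDK's persistence layer.
class InMemoryTemplateStore {
    private data class Key(val userId: UUID, val location: String, val modality: String)

    private val ids = mutableMapOf<Key, UUID>()
    private val templates = mutableMapOf<UUID, StoredTemplate>()

    // Returns the existing UUID when the same configuration is already stored,
    // otherwise generates a new one. The stored template is replaced either way.
    fun add(template: StoredTemplate): UUID {
        val key = Key(template.userId, template.location, template.modality)
        val id = ids.getOrPut(key) { UUID.randomUUID() }
        templates[id] = template
        return id
    }

    // Lists every template stored for the given user.
    fun listByUser(userId: UUID): List<StoredTemplate> =
        templates.values.filter { it.userId == userId }
}
```

In this model, adding two templates with the same user, location, and modality returns the same UUID both times and leaves a single stored entry holding the newer data.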

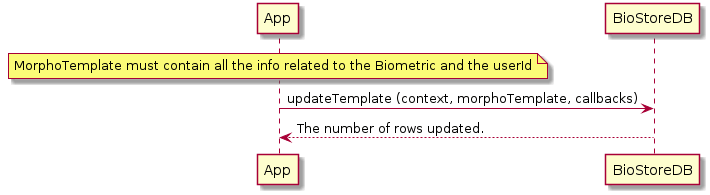

Update template

This updates a template in the repository.

BioStoreDB.updateTemplate(context, morphoTemplate, object : DataBaseAsyncCallbacks<Void?>() {
    override fun onPreExecute() {
        // Optional hook on the builtin Android AsyncTask callback `onPreExecute`
    }

    override fun onSuccess(result: Void?) {
        // The template was updated.
    }

    override fun onError(e: Exception?) {
        // An error has occurred.
    }
})

Function

void updateTemplate(final Context context, final IMorphoTemplate template, DataBaseAsyncCallbacks<Void> callbacks);

| Parameter | Description |
|---|---|
| context Context | The Android context. |
| template IMorphoTemplate | The template to be updated. |
| callbacks DataBaseAsyncCallbacks | Callbacks to be executed depending on the result. |

Errors

You will receive an exception reporting the error.

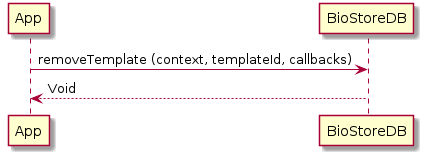

Remove template

This removes a template from the repository.

BioStoreDB.removeTemplate(context, templateId, object : DataBaseAsyncCallbacks<Void?>() {
    override fun onPreExecute() {
        // Optional hook on the builtin Android AsyncTask callback `onPreExecute`
    }

    override fun onSuccess(result: Void?) {
        // The template was removed.
    }

    override fun onError(e: Exception?) {
        // An error has occurred.
    }
})

Function

void removeTemplate(final Context context, final UUID templateId, DataBaseAsyncCallbacks<Void> callbacks);

| Parameter | Description |
|---|---|
| context Context | The Android context. |
| templateId UUID | The template identifier. |
| callbacks DataBaseAsyncCallbacks | Callbacks to be executed depending on the result. |

Errors

You will receive an exception reporting the error.

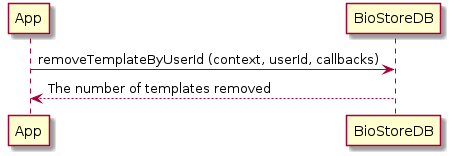

Remove templates associated with one userUUID

This removes the templates associated with the user identifier from the repository.

BioStoreDB.removeTemplateByUserId(context, userId, object : DataBaseAsyncCallbacks<Int?>() {
    override fun onPreExecute() {
        // Optional hook on the builtin Android AsyncTask callback `onPreExecute`
    }

    override fun onSuccess(result: Int?) {
        // The number of templates removed.
    }

    override fun onError(e: Exception?) {
        // An error has occurred.
    }
})

Function

void removeTemplateByUserId(final Context context, final UUID userId, DataBaseAsyncCallbacks<Integer> callbacks);

| Parameter | Description |
|---|---|
| context Context | The Android context. |
| userId UUID | The user identifier. |
| callbacks DataBaseAsyncCallbacks | Callbacks to be executed depending on the result. |

Errors

You will receive an exception reporting the error.

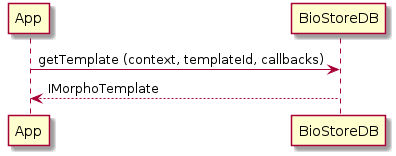

Retrieve template

This retrieves a template from the database.

BioStoreDB.getTemplate(context, templateId, object : DataBaseAsyncCallbacks<IMorphoTemplate?>() {
    override fun onPreExecute() {
        // Optional hook on the builtin Android AsyncTask callback `onPreExecute`
    }

    override fun onSuccess(result: IMorphoTemplate?) {
        // The template, if it exists.
    }

    override fun onError(e: Exception?) {
        // An error has occurred.
    }
})

Function

void getTemplate(final Context context, final UUID templateId, DataBaseAsyncCallbacks<MorphoTemplate> callbacks);

| Parameter | Description |
|---|---|
| context Context | The Android context. |
| templateId UUID | The template identifier. |